How TrustExam.ai Runs Educational Olympiads Online - Integrity at Scale Without Breaking Accessibility

Orken Rakhmatulla

Head of Education

Jan 8, 2026

Online olympiads expand access. Students can participate without travel, and organizers can reach more regions in a single season. But the moment rankings connect to prizes, scholarships, or admissions, integrity risks increase. Cheating shifts from individual attempts to organized support, impersonation, second-device coaching, and content leakage across groups.

This page explains how TrustExam.ai supports educational olympiads with a practical integrity program that balances fairness, scalability, and governance. The focus is not strictness for its own sake. The focus is evidence, consistent rules, and defensible outcomes.

The Challenge: Why Online Olympiads Need Exam Integrity

What makes olympiads different from regular exams

Olympiads are high-motivation assessments. Participants often prepare for months, rankings are public, and outcomes can shape scholarships or program admissions. That environment creates pressure and strong incentives to seek external help.

Common cheating patterns in olympiads (threat model)

In olympiads, the most frequent risks follow predictable patterns:

Impersonation or proxy test taking

Off-camera assistance from a coach, parent, or tutor

Second device use for messaging, calls, or AI tools

Screen mirroring or remote control tools

Answer sharing across groups during the same exam window

Appeals pressure after results are published

What organizers risk

Olympiad integrity is not only a technical issue. The organizer risks:

Reputation damage when finalists are challenged publicly

Loss of trust in rankings and certificates

Operational overload from manual review and appeals

Governance risk, especially when minors participate

Our Approach: Tiered Proctoring by Round (Qualifier to Finals)

Olympiads rarely consist of a single round, so integrity controls should match the stakes of each stage. TrustExam.ai supports tiered integrity profiles that organizers apply per round.

Qualifiers - accessibility first, risk-based monitoring

Early rounds prioritize accessibility and broad participation. The integrity goal is to reduce obvious abuse while keeping friction low, using risk-based monitoring and clear participant onboarding.

Semi-finals - stronger identity and review

Semi-finals narrow the pool. The integrity program increases identity assurance and strengthens review workflows, while keeping participant experience predictable.

Finals - highest assurance and audit-ready evidence

Finals define winners. Controls become stricter, evidence expectations are higher, and governance processes must be clear. The goal is to protect the final ranking and reduce disputes after results.

Expert Tip: “Tiering matters. A qualifier that blocks honest students harms fairness more than it helps integrity. Finals need defensible evidence, not extra friction for everyone.”
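
As a rough illustration of tiering, the per-round profiles described above could be expressed as a small configuration map. This is a minimal sketch, not TrustExam.ai's actual configuration format: the names `Round`, `IntegrityProfile`, `TIER_POLICY`, and `profile_for`, and the specific settings chosen for each round, are assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Round(Enum):
    QUALIFIER = "qualifier"
    SEMI_FINAL = "semi_final"
    FINAL = "final"


@dataclass(frozen=True)
class IntegrityProfile:
    identity_check: str   # "light" or "strong" (hypothetical levels)
    secure_browser: bool  # whether a locked-down browser mode is required
    vm_detection: bool    # whether virtual machine indicators are checked
    human_review: str     # "flagged_only" or "all_sessions"


# Hypothetical tier policy: controls tighten as the stakes rise.
TIER_POLICY = {
    Round.QUALIFIER: IntegrityProfile("light", False, False, "flagged_only"),
    Round.SEMI_FINAL: IntegrityProfile("strong", True, False, "flagged_only"),
    Round.FINAL: IntegrityProfile("strong", True, True, "all_sessions"),
}


def profile_for(round_: Round) -> IntegrityProfile:
    """Look up the integrity profile an organizer assigned to a round."""
    return TIER_POLICY[round_]


if __name__ == "__main__":
    for r in Round:
        print(r.value, profile_for(r))
```

Keeping the policy in one declarative map makes it easy to publish alongside the rulebook, so participants can see in advance which controls apply to which round.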

How We Deliver Online Olympiads End-to-End

Participant onboarding and readiness checks

Olympiad fairness starts with consistent conditions. We standardize:

readiness checks (camera, audio, browser, basic environment)

clear participation rules and allowed materials

support processes for peak-day start windows

This matters because onboarding failures often become appeals later.

Identity verification options (policy-driven)

Identity checks are configured based on policy, age group, and stakes. Some olympiads use light identity confirmation for qualifiers and stronger checks for finals. TrustExam.ai supports identity workflows that can scale without turning the process into bureaucracy.

Secure exam delivery (secure browser + device controls)

Depending on the round, organizers can apply controls such as:

secure browser modes and app restrictions

focus change monitoring and environment rules

device integrity signals (including VM indicators in higher-stakes rounds)

The value is simple: fewer bypass paths and fewer contested outcomes.

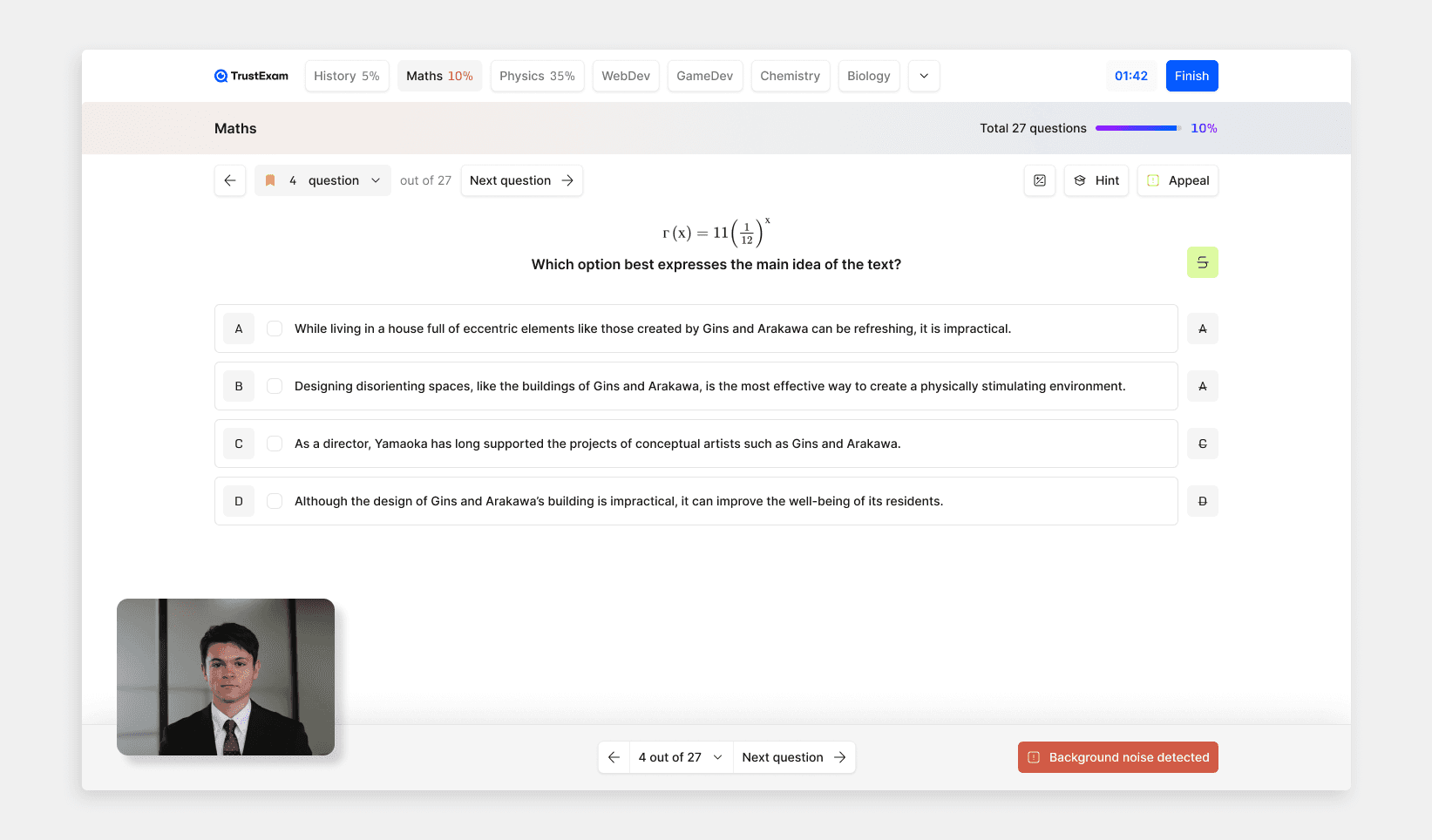

Multi-signal detection (video, audio, behavior, device)

Webcam-only approaches miss many real-world scenarios. TrustExam.ai combines signals to build an evidence trail:

video cues (face presence, multiple faces, suspicious movement)

audio indicators (where allowed by policy)

behavioral patterns (timing anomalies, navigation behavior)

device signals (restricted tools, VM indicators, environment events)

The output is not automatic punishment. It is a structured risk signal and evidence timeline that reviewers can defend.
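
To make the idea of a structured risk signal concrete, here is a minimal sketch of how events from several channels might be combined into a time-ordered timeline and a single score. It is an illustration under stated assumptions, not the platform's scoring method: the weights in `SIGNAL_WEIGHTS`, the `SignalEvent` fields, the event labels, and the "strongest event per channel" rule are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical channel weights (they sum to 1.0); real values would come
# from pilot calibration, not from this example.
SIGNAL_WEIGHTS = {"video": 0.3, "audio": 0.2, "behavior": 0.2, "device": 0.3}


@dataclass(frozen=True)
class SignalEvent:
    offset_seconds: int  # time since the session started
    channel: str         # "video", "audio", "behavior", or "device"
    label: str           # illustrative label, e.g. "multiple_faces"
    severity: float      # 0.0 (benign) to 1.0 (strong indicator)


def build_timeline(events):
    """Order events by time so a reviewer can follow the session."""
    return sorted(events, key=lambda e: e.offset_seconds)


def risk_score(events, enabled_channels=None):
    """Weighted sum of the strongest event per enabled channel, in [0, 1].

    Channels can be switched off, for example audio where policy forbids it.
    """
    channels = set(SIGNAL_WEIGHTS)
    if enabled_channels is not None:
        channels &= set(enabled_channels)
    strongest = {}
    for e in events:
        if e.channel in channels:
            strongest[e.channel] = max(strongest.get(e.channel, 0.0), e.severity)
    return round(sum(SIGNAL_WEIGHTS[ch] * sev for ch, sev in strongest.items()), 3)


events = [
    SignalEvent(1290, "video", "multiple_faces", 0.8),
    SignalEvent(45, "device", "vm_indicator", 1.0),
    SignalEvent(900, "behavior", "timing_anomaly", 0.5),
    SignalEvent(600, "audio", "second_voice", 0.5),
]

if __name__ == "__main__":
    for e in build_timeline(events):
        print(e.offset_seconds, e.channel, e.label, e.severity)
    print("score (all channels):", risk_score(events))
    print("score (audio disabled):", risk_score(events, {"video", "behavior", "device"}))
```

The score only ranks sessions for human review. Any decision still rests on the timeline and the rulebook, which is consistent with the point that the output is not automatic punishment.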

Review workflow and evidence packets for the jury

After the round, the jury needs speed and consistency. Instead of hours of raw recordings, TrustExam.ai produces:

time-coded evidence timelines

short review snippets aligned with the rulebook

consistent evidence packets for disputes and appeals
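
One way to turn flagged moments into short review snippets is to pad each event by a few seconds and merge overlapping windows, so the jury watches one clip per incident instead of several near-duplicates. The sketch below assumes event times are given as seconds from session start. The function names and the 15-second padding are hypothetical choices for illustration, not the product's behavior.

```python
def snippet_windows(event_offsets, padding=15):
    """Return merged (start, end) second ranges around each flagged event."""
    windows = []
    for t in sorted(event_offsets):
        start, end = max(0, t - padding), t + padding
        if windows and start <= windows[-1][1]:
            # Overlaps the previous window: extend it instead of adding a new clip.
            windows[-1] = (windows[-1][0], max(windows[-1][1], end))
        else:
            windows.append((start, end))
    return windows


def timecode(seconds):
    """Format a second offset as HH:MM:SS for the evidence timeline."""
    minutes, secs = divmod(seconds, 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


if __name__ == "__main__":
    for start, end in snippet_windows([45, 50, 1290]):
        print(f"{timecode(start)} to {timecode(end)}")
    # prints:
    # 00:00:30 to 00:01:05
    # 00:21:15 to 00:21:45
```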

Real Olympiad Cases We Delivered (Examples)

Below are representative olympiad deployments we have supported. Exact names and detailed metrics can be shared under NDA. The value here is the delivery pattern and what changed for the organizer.

National school olympiad - broad qualifiers, strict finals

Challenge: Maximum accessibility across regions in qualifiers, strong assurance in finals.

Delivery model: Tiered profiles by round, stronger identity checks in finals, standardized evidence packets for jury decisions.

What changed: Fewer escalations and more consistent decisions due to time-coded evidence.

STEM olympiad by a university consortium - standardized fairness

Challenge: Multiple institutions co-own the olympiad, so fairness must be consistent across campuses.

Delivery model: Unified rule triggers, shared review workflow, consistent evidence format for all participating institutions.

What changed: A single integrity standard without forcing members to change their LMS or internal processes.

Scholarship-linked olympiad - higher impersonation risk

Challenge: Strong incentive for proxy participation and heavy appeals pressure.

Delivery model: Stronger identity assurance for decisive rounds, tighter device controls, published appeals workflow.

What changed: Appeals became process-driven because outcomes were tied to documented evidence.

Multi-region language olympiad - minors and governance

Challenge: Participation of minors and strict privacy expectations.

Delivery model: Transparent notices, role-based access to materials, retention policy, human oversight.

What changed: Reduced governance risk and improved trust with schools and parents.

Results and What Changed for Organizers

Operational outcomes

Review workload becomes manageable through risk-based prioritization

Decisions become consistent across regions and rounds

Peak-day operations improve with readiness checks and support playbooks

Integrity outcomes

Higher confidence in finalists and published rankings

Defensible decisions with structured evidence timelines

Fewer disputes caused by unclear rules

Participant outcomes

Broad access in early rounds

Clear expectations and predictable experience

Fair governance for minors and vulnerable groups

Comparison Table: Olympiad Integrity Methods

| Method | Evidence strength | Cost | Scalability |
|---|---|---|---|
| Honor code only | Low | Low | High |
| Live proctors only | Medium | High | Low |
| Webcam recording only | Medium | Medium | Medium |
| Secure browser only | Medium-High | Medium | High |
| Multi-signal + risk scoring + evidence timeline | High | Medium | High |

Why evidence strength matters for appeals

In olympiads, disputes often happen after results. Evidence timelines make decisions explainable. That protects both the jury and honest participants.

Implementation Checklist for Olympiad Organizers

| Step | Owner | Deliverable |
|---|---|---|
| Define integrity tiers by round | Olympiad director | Tier policy and rulebook |
| Set identity checks and exceptions | Operations + legal | Identity and exceptions policy |
| Configure proctoring profiles | Integrity lead | Qualifier/semi/final profiles |
| Prepare onboarding and readiness checks | Operations | Setup guide + pre-check flow |
| Run a pilot round | Program lead | Pilot report + calibration |
| Launch monitoring and support | Support lead | Incident response playbook |
| Finalize review and appeals workflow | Jury secretary | Evidence pack + appeal template |
| Define retention and access governance | Legal + audit | Retention and access policy |

Governance: Privacy, Fairness, Retention, and Appeals

Many olympiad participants are minors. Governance must be designed upfront:

transparent notices and clear rules

role-based access to recordings and evidence

defined retention schedules and deletion workflows

human oversight for high-impact decisions

published appeals process with clear timelines

For alignment, organizers often reference established sources like NIST Digital Identity Guidelines, university academic integrity policies, and peer-reviewed research on remote assessment integrity. The goal is not to quote documents. The goal is to follow recognized principles: proportionality, transparency, and oversight.

Next Step: Run a Pilot Olympiad With TrustExam.ai

A pilot should validate three things: participant experience, operational stability under load, and defensible evidence for jury decisions. Start with one round, calibrate the integrity profile, then scale to decisive rounds with stronger controls.

Explore:

TrustExam.ai online proctoring platform: https://trustexam.ai

AI online proctoring: https://trustexam.ai/online-proctoring

Proctoring for universities and schools: https://trustexam.ai/who-we-help/education

Proctoring for government exams: https://trustexam.ai/who-we-help/government

FAQ: Online Olympiad Proctoring

Do olympiads always need strict proctoring?

No. Tiering is essential. Keep qualifiers accessible and raise assurance for finals.

What is the most common failure in online olympiads?

Relying on webcam-only monitoring. Many real risks happen on-device or through group coordination.

How do you handle weak internet fairly?

Use readiness checks, published support rules, and defined retake policies. Avoid ad-hoc decisions.

How do you reduce disputes after results?

Publish an appeals workflow upfront and use standardized evidence packets with time-coded timelines.

How do you start if this is your first online olympiad?

Run one pilot round, calibrate thresholds, validate operations under load, then expand by stage.
