
Secure Testing System for High-Stakes Exams - TrustExam.ai Testing Platform

Learn how TrustExam.ai’s testing system delivers secure, scalable exams online or in testing centers with device integrity controls, analytics, and audit-ready reporting.

Orken Rakhmatulla

Head of Education

Jan 12, 2026

Most organizations think the hardest part of assessment is building good questions. In practice, the hardest part is running exams at scale without losing credibility. The moment exams affect admissions, licensing, hiring, or public services, the testing platform becomes part of the governance process. It must be stable under load, predictable for candidates, and defensible for auditors.

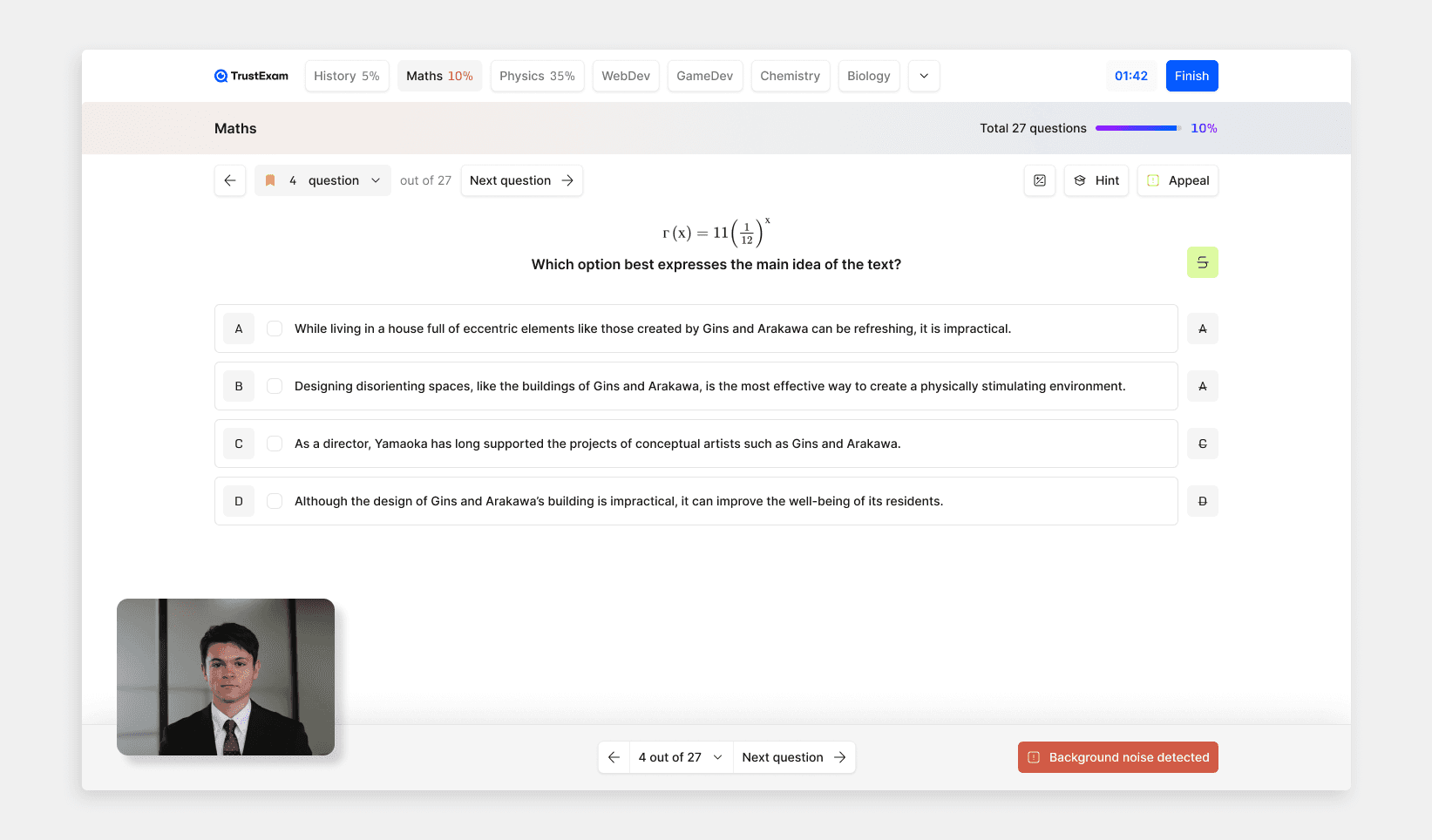

TrustExam.ai’s testing system is built for that reality. It supports two common delivery models:

Run exams directly on the TrustExam testing platform (end-to-end delivery, reporting, scheduling).

Integrate into your existing LMS or portal and keep your current exam flow while adding integrity controls and reporting.

The goal is not “more strictness.” The goal is a testing environment you can trust.

1) What a “testing system” really means in high-stakes assessment

A testing system is more than a quiz engine. In regulated or public-facing exams, it must handle:

Candidate lifecycle (registration, eligibility, attempts, identity rules)

Delivery under constraints (time windows, limited seats, peak concurrency)

Security and integrity (device integrity, environment controls, evidence)

Operational workflows (support, incident handling, appeals, auditing)

Reporting (per attempt, per center, per cohort, anomaly patterns)

If any one of these fails, results become questionable even when the questions themselves are strong.

2) Core capabilities of the TrustExam testing platform

Exam delivery built for scale

The platform supports structured exam creation, scheduling, attempt rules, and a consistent candidate experience across thousands of sessions. This matters when you run national programs, admission campaigns, or multi-campus certification.
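To make "attempt rules" concrete, here is a minimal sketch of the kind of check a delivery system runs before opening an attempt. The `AttemptRules` and `Candidate` types, the field names, and the reason codes are hypothetical illustrations, not TrustExam.ai's API; real programs define their own eligibility and retake policies.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class AttemptRules:
    # Hypothetical policy fields for one exam session.
    window_start: datetime
    window_end: datetime
    max_attempts: int


@dataclass
class Candidate:
    candidate_id: str
    eligible: bool          # outcome of registration / eligibility checks
    attempts_used: int = 0


def can_start(rules: AttemptRules, cand: Candidate, now: datetime) -> tuple[bool, str]:
    """Decide whether a candidate may open a new attempt, with a reason code."""
    if not cand.eligible:
        return False, "not_eligible"
    if not (rules.window_start <= now <= rules.window_end):
        return False, "outside_window"
    if cand.attempts_used >= rules.max_attempts:
        return False, "attempt_limit_reached"
    return True, "ok"


rules = AttemptRules(datetime(2026, 1, 20, 9), datetime(2026, 1, 20, 12), max_attempts=1)
print(can_start(rules, Candidate("c-001", eligible=True), datetime(2026, 1, 20, 10)))
# (True, 'ok')
print(can_start(rules, Candidate("c-002", eligible=True, attempts_used=1), datetime(2026, 1, 20, 10)))
# (False, 'attempt_limit_reached')
```

Returning an explicit reason code, rather than a bare boolean, gives support staff and auditors the same answer the candidate saw.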

Flexible deployment: online, offline, hybrid

Organizations often need different formats:

fully remote online testing

controlled testing centers

hybrid models (remote for qualifiers, centers for finals)

A single platform that supports all three reduces operational fragmentation and improves reporting consistency.

Built-in integrity controls at the platform level

When integrity is bolted on later, it becomes a collection of exceptions. We approach it as a platform layer.

Typical integrity building blocks include:

secure exam environment controls (policy-driven)

device and environment integrity monitoring (including VM and suspicious setup detection)

configuration integrity checks to reduce compromised machine usage

event timelines for review and audit

The key is evidence and consistency. Decisions should not depend on who reviewed the session.
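One way to keep decisions independent of the reviewer is to compute flags with a pure function over the session's event timeline, so the same evidence always produces the same flags. The sketch below assumes a hypothetical `Event` record and two illustrative rules (`vm_detected`, `excess_focus_loss`); it is not TrustExam.ai's rule engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    t: float   # seconds since the attempt started
    kind: str  # hypothetical event names, e.g. "focus_lost", "vm_detected"


def evaluate(events: list[Event], max_focus_losses: int = 3) -> list[tuple[str, float]]:
    """Apply fixed rules to a timeline and return time-coded flags.

    The function is pure: the same timeline always yields the same flags,
    regardless of who triggers the review.
    """
    timeline = sorted(events, key=lambda e: e.t)
    flags: list[tuple[str, float]] = []

    # Rule 1: any VM detection is flagged at its first occurrence.
    first_vm = next((e for e in timeline if e.kind == "vm_detected"), None)
    if first_vm is not None:
        flags.append(("vm_detected", first_vm.t))

    # Rule 2: too many focus losses, time-coded at the event that crossed the limit.
    focus = [e for e in timeline if e.kind == "focus_lost"]
    if len(focus) > max_focus_losses:
        flags.append(("excess_focus_loss", focus[max_focus_losses].t))

    return flags


timeline = [Event(40.0, "focus_lost"), Event(5.0, "vm_detected"),
            Event(10.0, "focus_lost"), Event(20.0, "focus_lost"), Event(30.0, "focus_lost")]
print(evaluate(timeline))  # [('vm_detected', 5.0), ('excess_focus_loss', 40.0)]
```

Because each flag carries a timestamp, a reviewer can jump straight to the relevant moment instead of scanning the whole session.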

3) Secure Testing Center Software: when you run physical centers

Many high-stakes programs still rely on physical testing centers. The risk profile changes, but it does not disappear. Centers face issues like compromised machines, shared accounts, uncontrolled peripherals, and inconsistent operator actions.

TrustExam’s testing center software is designed for controlled environments and includes capabilities such as:

device integrity and VM detection

configuration integrity monitoring

automatic blocking of compromised machines

per-seat statistics and anomaly detection

online application intake, automated booking, and seat allocation

Why this matters: centers become more scalable when operations are standardized and integrity decisions are traceable.
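Configuration integrity monitoring and automatic blocking can be illustrated with a simple baseline comparison: fingerprint an approved seat configuration once, then block any seat whose current configuration no longer matches or that reports a virtual machine. This is a minimal sketch; the configuration keys and the `seat_status` helper are hypothetical, and real agents collect far richer signals.

```python
import hashlib
import json


def config_fingerprint(config: dict) -> str:
    """SHA-256 over canonical JSON, so key order does not affect the hash."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def seat_status(config: dict, baseline: str, vm_detected: bool) -> str:
    """Return 'ready' or a blocking reason for one testing-center seat."""
    if vm_detected:
        return "blocked: virtual machine detected"
    if config_fingerprint(config) != baseline:
        return "blocked: configuration drift"
    return "ready"


# Hypothetical "golden" configuration captured when the center was certified.
golden = {"os_build": "22631", "usb_storage": "disabled", "remote_desktop": "off"}
baseline = config_fingerprint(golden)

print(seat_status({"remote_desktop": "off", "usb_storage": "disabled", "os_build": "22631"},
                  baseline, vm_detected=False))  # ready
print(seat_status({**golden, "usb_storage": "enabled"}, baseline, vm_detected=False))
# blocked: configuration drift
```

Logging the reason string alongside the seat ID is what makes each blocking decision traceable later.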

4) Proctoring and testing: how the layers work together

A mature assessment setup separates responsibilities:

The testing system delivers the exam, enforces attempt rules, and manages the candidate lifecycle.

The integrity layer monitors signals, produces evidence, and supports review.

The governance layer defines policies, retention, access, and appeal rules.

TrustExam.ai supports these layers without forcing you into a single rigid workflow. Some customers run tests entirely in our platform. Others keep Moodle, Canvas, or a custom LMS for questions, while using TrustExam for integrity, evidence, and reporting.

5) Reporting that supports real decisions

A testing platform earns trust through what it can prove. Reporting should support at least three audiences:

Operations teams

start success rate, device readiness, drop-offs, support load

center utilization, seat-level anomalies, incident trends

Review and audit teams

time-coded evidence timeline per attempt

rule-based flags and event context

consistent reviewer workflow

Leadership and regulators

integrity posture over time

appeals volume and resolution outcomes

compliance and access governance overview

If reporting is only “a video recording,” teams drown in manual review. The platform should reduce review workload, not create it.
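Two of the operations metrics above reduce to small calculations. The sketch below computes a start success rate and flags seats whose flag counts stand out from the rest of the center using a simple z-score. The function names, the 2.0 cutoff, and the choice of z-score are assumptions for illustration; a production system may use more robust statistics.

```python
from statistics import mean, pstdev


def start_success_rate(scheduled: int, started: int) -> float:
    """Share of scheduled attempts that actually started."""
    return started / scheduled if scheduled else 0.0


def anomalous_seats(flags_per_seat: dict[str, int], z: float = 2.0) -> list[str]:
    """Seats whose flag count is more than z standard deviations above the center mean."""
    counts = list(flags_per_seat.values())
    if len(counts) < 2:
        return []
    mu, sigma = mean(counts), pstdev(counts)
    if sigma == 0:
        return []  # every seat looks the same; nothing stands out
    return sorted(seat for seat, c in flags_per_seat.items() if (c - mu) / sigma > z)


seats = {f"A{i:02d}": 1 for i in range(1, 10)}
seats["A10"] = 10
print(start_success_rate(scheduled=200, started=194))  # 0.97
print(anomalous_seats(seats))                          # ['A10']
```

A seat-level view like this points operators at a specific machine or workstation to inspect, rather than at every session recorded in the center.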

6) Governance: privacy, fairness, and appeals are not optional

High-stakes assessment systems must be defensible, not only “secure.”

A practical governance setup includes:

clear candidate notices and policies

retention rules for logs and recordings

role-based access control (who can view what and when)

exception handling (connectivity issues, accessibility accommodations)

human oversight for high-impact decisions

documented appeals workflow tied to evidence

This protects both the institution and honest candidates.
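Role-based access and retention rules are easier to audit when they are written down as explicit policy. Here is a minimal sketch, assuming hypothetical role names, permissions, and retention periods (they are illustrations, not recommended values), including one common design choice: evidence tied to an open appeal is never treated as expired.

```python
from datetime import date, timedelta

# Hypothetical role -> permission mapping.
ROLE_PERMISSIONS = {
    "proctor": {"view_timeline"},
    "reviewer": {"view_timeline", "view_recording"},
    "appeals_officer": {"view_timeline", "view_recording", "decide_appeal"},
}

# Hypothetical retention periods per artifact type.
RETENTION = {"recording": timedelta(days=90), "event_log": timedelta(days=365)}


def can(role: str, action: str) -> bool:
    """Unknown roles get no permissions (deny by default)."""
    return action in ROLE_PERMISSIONS.get(role, set())


def is_expired(kind: str, created: date, today: date, under_appeal: bool = False) -> bool:
    """True if an artifact has outlived its retention period and may be deleted."""
    # Evidence tied to an open appeal is kept until the appeal is resolved.
    if under_appeal:
        return False
    return today - created > RETENTION[kind]


print(can("proctor", "view_recording"))                               # False
print(is_expired("recording", date(2026, 1, 12), date(2026, 5, 1)))   # True
```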

7) Where the TrustExam testing system fits best

The testing platform is designed for organizations that run:

university entrance and admissions exams

session exams across a network of universities

national or government qualification tests

professional licensing and certification

corporate compliance and safety knowledge testing

educational olympiads with high incentive and public rankings

A common trigger: exam volume grows, reputational risk rises, and manual control stops scaling.

8) How teams typically roll it out

A realistic rollout avoids disruption:

Map exam types and stakes (threat model and operational model)

Run a pilot with real devices and realistic conditions

Calibrate policies and integrity thresholds

Define review and appeals workflow before scaling

Expand to larger cohorts and more exam types

This approach reduces surprises during peak exam windows.
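The "calibrate policies and integrity thresholds" step can be grounded in pilot data. One simple approach, sketched below under the assumption that each pilot session gets a single numeric risk score (the scoring itself and the `calibrate_threshold` name are hypothetical), is to pick the lowest threshold that sends no more than a target share of sessions to manual review.

```python
import math


def calibrate_threshold(pilot_scores: list[float], target_review_rate: float) -> float:
    """Lowest threshold such that at most target_review_rate of pilot
    sessions score strictly above it (and would be queued for review)."""
    if not pilot_scores or not 0 < target_review_rate < 1:
        raise ValueError("need pilot scores and a review rate between 0 and 1")
    ranked = sorted(pilot_scores)
    allowed = math.floor(target_review_rate * len(ranked))  # sessions we can afford to review
    return ranked[len(ranked) - allowed - 1]


pilot = [float(s) for s in range(1, 101)]
threshold = calibrate_threshold(pilot, target_review_rate=0.05)
print(threshold)                             # 95.0
print(sum(s > threshold for s in pilot))     # 5
```

Tying the threshold to review capacity keeps the workload predictable before scaling; the rate itself is a policy decision for the governance layer.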
