Tech Orda IT Certification Assessments in Kazakhstan: How TrustExam.ai Helped Deliver Secure, Scalable Remote Testing

Learn how TrustExam.ai supported Astana Hub’s Tech Orda program with scalable AI-proctored certification exams, identity checks, and defensible review workflows.

Orken Rakhmatulla

Head of Education

Jan 8, 2026

Tech Orda is one of Kazakhstan’s most visible IT talent programs. Run by Astana Hub with support from the Ministry of Digital Development, it funds training for citizens aged 18 to 45 and channels learners into accredited private IT schools and courses (source: astanahub.com).

Training at scale creates a new operational reality: exams stop being a small classroom event and become a nationwide assessment pipeline. If integrity fails, the consequences are not only academic. They affect public trust, the return on public funding, and confidence in workforce readiness.

This article explains how TrustExam.ai supported Tech Orda certification-style assessments with evidence-based proctoring, exam-day operations, and governance that works for high-stakes, large-scale remote testing.

Why Tech Orda Assessments Need Strong Exam Integrity

Tech Orda is designed to expand access to IT education across the country. That means participants differ widely in location, connectivity, devices, and testing environment (source: astanahub.com).

From an integrity standpoint, that mix creates three predictable pressures:

High incentive

Passing impacts a learner’s trajectory inside a funded program and can influence career opportunities.

Distributed environments

Exams happen outside controlled classrooms. Standard supervision is not available.

Scale

As participant volume grows, manual monitoring needs proportionally more staff, and quality degrades before headcount catches up. Review teams burn out, and decisions become inconsistent.

A credible certification process needs consistent rules, defensible evidence, and an appeals workflow that does not collapse under load.

The Threat Model: What Actually Breaks Remote Certification Exams

In remote IT certification assessments, the most common integrity risks are not exotic. They are operationally simple and repeatable:

Impersonation (proxy test-taking using someone else’s identity)

Second-device support (messaging, calls, AI tools, or a helper off-camera)

Screen mirroring and remote control tools

Virtual machines or controlled environments used to bypass restrictions

Content leakage between groups if exam windows are poorly designed

Appeals pressure when results affect progression or funding outcomes

The main lesson: integrity is not a single feature. It is a system of controls plus governance.

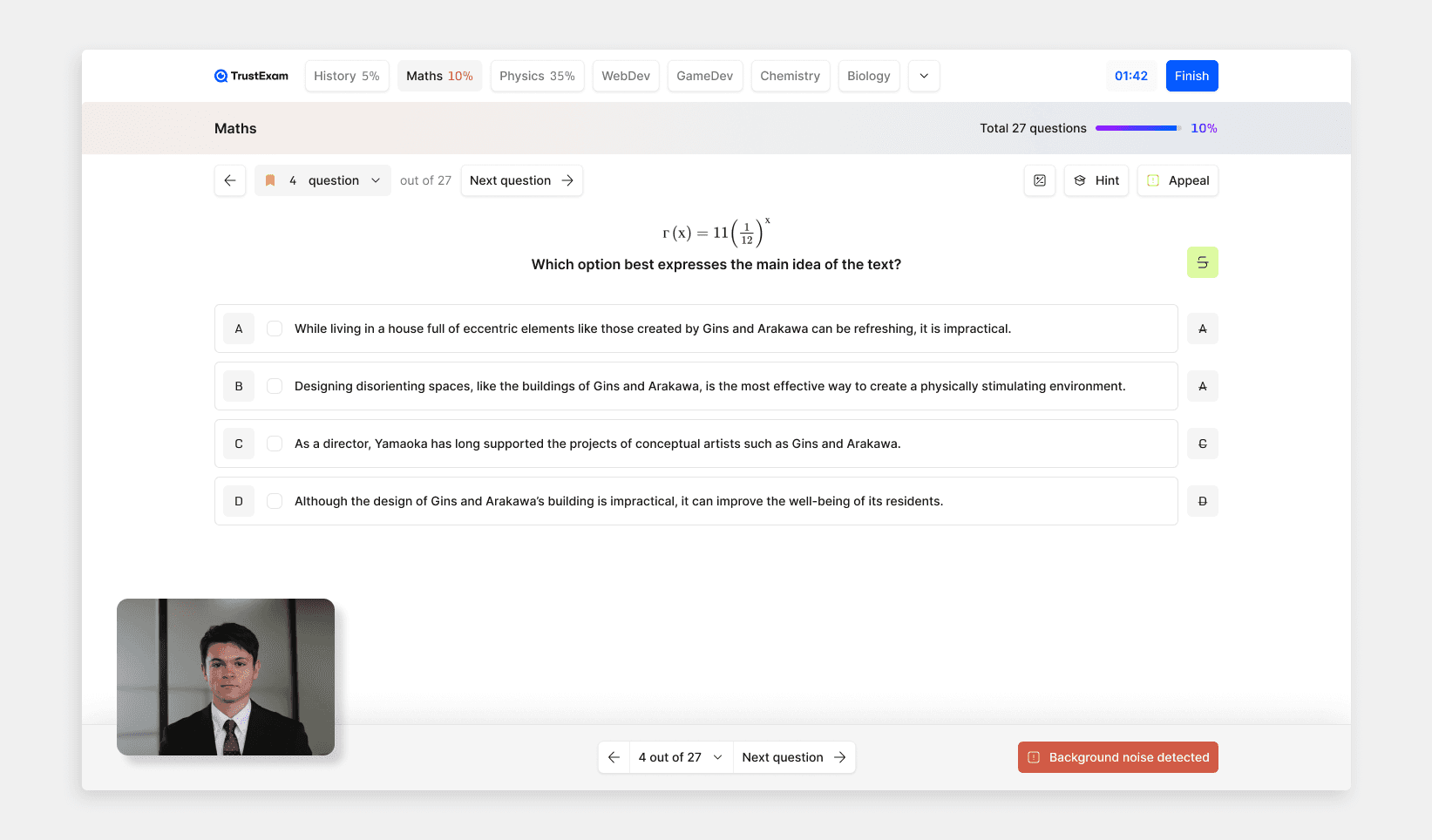

What TrustExam.ai Delivered for Tech Orda-Style Certification

On our side, the objective was clear: enable remote assessments that are scalable and audit-friendly, without turning every exam into a high-friction experience.

TrustExam.ai’s public case summary notes that we helped certify 3,000+ IT specialists through secure, AI-proctored testing in the Tech Orda context (source: oqylyq.kz).

Below is the delivery model behind that result.

1) Identity Assurance That Matches the Stakes

Impersonation is the fastest way to destroy trust in certification. The solution is not “one more selfie.” The solution is a repeatable identity flow that works across devices and regions.

A practical identity layer includes:

pre-exam identity checks aligned to policy

consistent entry rules (what is accepted, what triggers escalation)

evidence capture that supports human review if a case is contested

For government-adjacent programs, the value is governance: decisions can be defended when stakeholders ask “why was this participant flagged?”
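To make “consistent entry rules” concrete, here is a minimal Python sketch of an entry-policy function that treats every participant the same way. It is not TrustExam.ai’s implementation: the `IdentityCheck` fields, the `MATCH_THRESHOLD` value, and the choice to route every failed check to human review instead of auto-denying are illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ADMIT = "admit"
    ESCALATE = "escalate"  # hold entry until a human reviewer confirms identity


@dataclass(frozen=True)
class IdentityCheck:
    """Hypothetical result of a pre-exam identity check."""
    document_valid: bool     # ID document passed the policy's validity rules
    face_match_score: float  # 0.0-1.0 similarity between live capture and ID photo
    liveness_passed: bool    # live capture is a real person, not a photo or replay


# Hypothetical threshold; a real program sets this in its written entry policy.
MATCH_THRESHOLD = 0.85


def entry_decision(check: IdentityCheck) -> tuple[Decision, list[str]]:
    """Apply the same entry rules to every participant and record why."""
    reasons: list[str] = []
    if not check.document_valid:
        reasons.append("ID document failed validity rules")
    if not check.liveness_passed:
        reasons.append("liveness check failed")
    if check.face_match_score < MATCH_THRESHOLD:
        reasons.append(
            f"face match {check.face_match_score:.2f} below {MATCH_THRESHOLD:.2f}"
        )
    # Any failed rule escalates to a person; the code never auto-denies entry.
    decision = Decision.ESCALATE if reasons else Decision.ADMIT
    return decision, reasons
```

Returning the reasons together with the decision means an escalation reaches the reviewer with its justification attached, which is what later makes the decision defensible.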

2) Secure Exam Delivery That Reduces Bypass Paths

Remote assessments fail when the exam environment is porous. For high-stakes certification, you want to reduce the obvious bypass routes without punishing normal users.

Typical controls include:

secure browser delivery modes (where appropriate)

environment and device integrity signals

clear rules for allowed tools (especially if the assessment includes coding tasks)

This matters because many disputes are not about cheating. They are about unclear rules. Clear exam delivery rules prevent operational chaos.
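One way to keep delivery rules unambiguous is to publish them as data and check observed environment events against exactly that data. The Python sketch below assumes a hypothetical `EnvironmentReport` emitted by a delivery client (secure-browser status, tools the candidate opened, VM and remote-control detection). The field names and the `exam-ide` tool are placeholders, not a real product API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeliveryRules:
    secure_browser_required: bool
    allowed_tools: frozenset[str] = frozenset()  # tools candidates were told they may open
    allow_vm: bool = False
    allow_remote_control: bool = False


@dataclass(frozen=True)
class EnvironmentReport:
    """Hypothetical summary emitted by the exam delivery client."""
    in_secure_browser: bool
    opened_tools: frozenset[str]  # applications the candidate opened during the exam
    vm_detected: bool
    remote_control_detected: bool


# Example rule set for a coding assessment; "exam-ide" is a placeholder name.
CODING_EXAM = DeliveryRules(
    secure_browser_required=True,
    allowed_tools=frozenset({"exam-ide"}),
)


def rule_violations(rules: DeliveryRules, env: EnvironmentReport) -> list[str]:
    """List every way the observed environment departs from the published rules."""
    found: list[str] = []
    if rules.secure_browser_required and not env.in_secure_browser:
        found.append("exam not running in secure browser")
    # Set difference: anything opened that is not on the allowed list.
    for tool in sorted(env.opened_tools - rules.allowed_tools):
        found.append(f"tool not on allowed list: {tool}")
    if env.vm_detected and not rules.allow_vm:
        found.append("virtual machine detected")
    if env.remote_control_detected and not rules.allow_remote_control:
        found.append("remote-control tool detected")
    return found
```

Because the candidate-facing rules and the checks read from the same `DeliveryRules` object, a dispute can be settled by pointing at the rule that was published, not at a reviewer’s interpretation.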

3) Multi-Signal Detection and Evidence Timelines (Not “Blind Automation”)

A common mistake in remote proctoring is treating webcam video as the whole truth. In certification exams, risks often happen off-camera or on-device.

TrustExam.ai is built around multi-signal monitoring and evidence-based reporting:

video signals (presence, suspicious framing, multiple faces)

behavioral patterns (timing anomalies, interaction patterns)

device/environment events (restricted behaviors, integrity indicators)

post-exam analytics that convert raw data into review-ready evidence

The key is the output: not a vague suspicion, but an evidence timeline that a reviewer can understand and justify.

This matters because high-stakes programs always get appeals. Evidence timelines turn arguments into a process.
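The idea of an evidence timeline can be illustrated by merging per-source events into a single chronological record that a reviewer reads top to bottom. This is a minimal Python sketch with a hypothetical `Event` shape and example source labels; it covers only the merge-and-render step, not how any signal is detected.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True, order=True)
class Event:
    t_seconds: float  # offset from exam start; first field, so events sort by time
    source: str       # signal family, e.g. "video", "behavior", "device"
    description: str  # human-readable note for the reviewer


def build_timeline(*streams: list[Event]) -> list[Event]:
    """Merge per-source event lists, each already sorted by time, into one timeline."""
    return list(heapq.merge(*streams))


def render(timeline: list[Event]) -> str:
    """Format the timeline as mm:ss lines a reviewer can read and cite in a decision."""
    lines = []
    for e in timeline:
        minutes, seconds = divmod(int(e.t_seconds), 60)
        lines.append(f"{minutes:02d}:{seconds:02d} [{e.source}] {e.description}")
    return "\n".join(lines)


if __name__ == "__main__":
    device = [Event(300.0, "device", "exam window lost focus")]
    behavior = [Event(305.5, "behavior", "long answer entered in under 2 s")]
    video = [Event(312.0, "video", "second face in frame for 4 s")]
    print(render(build_timeline(device, behavior, video)))
    # 05:00 [device] exam window lost focus
    # 05:05 [behavior] long answer entered in under 2 s
    # 05:12 [video] second face in frame for 4 s
```

Since each source stream is already ordered by time, `heapq.merge` combines them without re-sorting everything, and the reviewer sees three nearby signals as one sequence instead of three unrelated alerts.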

4) Review Operations That Scale Beyond “Live Proctors”

At national scale, “put a human on every session” is rarely sustainable. Even if budgets allow it, consistency becomes the problem.

A scalable model looks like this:

automated risk scoring to prioritize sessions for review

standardized reviewer playbooks (what counts as a violation, what is inconclusive)

evidence packets that reduce subjectivity between reviewers

In practice, this prevents a common failure mode: different reviewers making different decisions for the same behavior.
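Risk-based prioritization can be sketched as a weighted score per session plus a queue sorted by that score. The signal names, weights, per-signal cap of three occurrences, and 0.3 threshold below are hypothetical values chosen for illustration; a real program would calibrate them against previously reviewed sessions and document them in the reviewer playbook.

```python
from dataclasses import dataclass, field

# Hypothetical weights (summing to 1.0); calibrate against reviewed sessions.
WEIGHTS = {
    "vm_detected": 0.40,
    "second_face": 0.35,
    "timing_anomaly": 0.15,
    "focus_lost": 0.10,
}
CAP = 3  # occurrences beyond this add no further risk


@dataclass
class Session:
    session_id: str
    signals: dict[str, int] = field(default_factory=dict)  # signal name -> occurrences


def risk_score(session: Session) -> float:
    """Weighted, capped signal counts, giving a score between 0.0 and 1.0."""
    score = 0.0
    for name, count in session.signals.items():
        # Unknown signals get weight 0 so they cannot silently inflate scores.
        score += WEIGHTS.get(name, 0.0) * min(count, CAP) / CAP
    return min(score, 1.0)


def review_queue(sessions: list[Session], threshold: float = 0.3) -> list[str]:
    """Session IDs at or above the threshold, highest risk first (ties by ID)."""
    scored = [(risk_score(s), s.session_id) for s in sessions]
    flagged = [pair for pair in scored if pair[0] >= threshold]
    flagged.sort(key=lambda pair: (-pair[0], pair[1]))
    return [session_id for _, session_id in flagged]
```

The score only orders the queue; it does not decide outcomes. Capping each signal keeps one noisy sensor (for example, frequent focus changes on an unstable laptop) from dominating the ranking, and reviewers still apply the same playbook to every flagged session.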

5) Governance: Privacy, Retention, Fairness, Appeals

With public programs, governance is not optional. It is the core requirement.

A compliance-friendly assessment program should define:

privacy notices and participant consent flows (where required)

retention policy (how long recordings/logs exist, who can access them)

role-based access control (reviewers vs administrators vs auditors)

fairness and exceptions (connectivity issues, disability accommodations)

human oversight for high-impact decisions

appeals workflow with documented timelines and evidence disclosure rules

Many organizations align their policies to reputable guidance such as NIST digital identity principles and established university academic integrity frameworks, then adapt them to local legal requirements. (Mentioned here as a governance approach, not as a compliance claim.)
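Retention and access rules are easier to audit when they are written as explicit configuration. The Python sketch below encodes hypothetical retention periods, role permissions, and an appeal window; the artifact names and durations are placeholders rather than recommendations, and local law determines the real values.

```python
from datetime import date, timedelta
from enum import Enum
from typing import Optional


class Role(Enum):
    REVIEWER = "reviewer"
    ADMINISTRATOR = "administrator"
    AUDITOR = "auditor"


# Placeholder retention periods; real values come from local law and policy.
RETENTION = {
    "video_recording": timedelta(days=90),
    "event_log": timedelta(days=365),
    "decision_record": timedelta(days=3 * 365),
}
APPEAL_WINDOW = timedelta(days=14)  # hypothetical deadline for filing an appeal

# Least-privilege permissions: (artifact, action) pairs each role may perform.
PERMISSIONS = {
    Role.REVIEWER: {("video_recording", "read"), ("event_log", "read"),
                    ("decision_record", "write")},
    Role.ADMINISTRATOR: {("event_log", "read"), ("decision_record", "read")},
    Role.AUDITOR: {("event_log", "read"), ("decision_record", "read")},
}


def can_access(role: Role, artifact: str, action: str) -> bool:
    """True only if the role was explicitly granted this action on this artifact."""
    return (artifact, action) in PERMISSIONS.get(role, set())


def purge_date(artifact: str, captured_on: date,
               appeal_open: bool = False) -> Optional[date]:
    """Earliest deletion date, or None while an appeal holds the evidence."""
    if appeal_open:
        return None
    # Never delete evidence before the appeal window has closed.
    return captured_on + max(RETENTION[artifact], APPEAL_WINDOW)
```

Two design choices matter here: retention never ends before the appeal window closes, and an open appeal acts as a hold so evidence cannot be purged while a case is still being decided.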

Comparison: Methods to Protect Remote Certification Exams

| Method | Evidence strength | Cost at scale | Scalability | Typical failure mode |
|---|---|---|---|---|
| Honor code only | Low | Low | High | No enforceability, credibility loss |
| Live proctors only | Medium | High | Low | Expensive, inconsistent decisions |
| Webcam recording only | Medium | Medium | Medium | Misses on-device and coordinated help |
| Secure browser only | Medium-High | Medium | High | Does not solve impersonation alone |
| Multi-signal + evidence timeline + risk-based review | High | Medium | High | Requires clear governance and playbooks |

What This Case Means for Other Governments and Certification Bodies

Tech Orda is a strong example of a wider pattern: public or public-adjacent programs are moving to large-scale training and remote assessment. As they scale, integrity becomes a “system property,” not a feature.

If an agency is responsible for:

professional certifications

workforce training exams

licensing pre-tests

scholarship or grant-linked assessments

…the same principles apply:

tier controls by stakes

design identity flows that can be defended

prioritize evidence timelines over raw recordings

build an appeals-ready governance layer from day one
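“Tier controls by stakes” can be expressed as cumulative control sets, where each higher tier inherits every control from the tiers below it. The tier names and control identifiers in this Python sketch are illustrative assumptions, loosely mirroring the methods compared in the table earlier in this article.

```python
from enum import IntEnum


class Stakes(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Illustrative control sets; each tier adds to the tiers below it.
TIER_CONTROLS = {
    Stakes.LOW: {"honor_code", "basic_identity_check"},
    Stakes.MEDIUM: {"webcam_recording", "risk_scoring"},
    Stakes.HIGH: {"document_identity_check", "secure_browser",
                  "device_integrity_signals", "human_review_of_flags",
                  "appeals_workflow"},
}


def required_controls(stakes: Stakes) -> set[str]:
    """Union of the controls for this tier and every lower tier."""
    controls: set[str] = set()
    for tier in Stakes:  # iterates LOW, MEDIUM, HIGH in definition order
        if tier <= stakes:
            controls |= TIER_CONTROLS[tier]
    return controls
```

Making tiers cumulative means raising an exam’s stakes can only add controls, never silently drop one that a lower tier already required.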

Where TrustExam.ai Fits

TrustExam.ai is an AI proctoring and exam integrity platform designed for high-stakes and large-scale exams, with evidence-based reporting, secure exam delivery options, and audit-friendly workflows (source: trustexam.ai).

If you are building a nationwide certification program, we typically start with:

a threat-model workshop

a pilot focused on operations and evidence quality

a scaling plan covering governance, review playbooks, and reporting

You can start with the TrustExam.ai online proctoring platform (trustexam.ai) and map it to your certification workflow.

FAQ

How do you detect impersonation in remote certification exams?

Combine identity checks with behavioral and device signals, then confirm flagged cases using evidence timelines and human review before any decision that changes an outcome.

Is AI proctoring enough by itself?

No. Technology reduces risk, but governance decides outcomes. Clear rules, retention, access control, and appeals matter as much as detection.

How do you keep assessments fair for participants with weak internet?

Define exception rules upfront, use readiness checks, and keep decisions process-driven. Avoid ad-hoc retakes and inconsistent thresholds.
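Process-driven exception handling means the connectivity rule is fixed before exam day and applied the same way afterwards. The sketch below assumes a hypothetical policy with two limits (longest single disconnection and total disconnected time); the threshold values and outcome names are placeholders for whatever a program’s written exception policy specifies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Disconnect:
    start_s: float     # seconds from exam start when the connection dropped
    duration_s: float  # seconds until the session reconnected


# Hypothetical limits, published to participants before exam day.
MAX_SINGLE_GAP_S = 120.0
MAX_TOTAL_GAP_S = 300.0


def connectivity_outcome(gaps: list[Disconnect]) -> str:
    """Map a session's disconnections to a pre-agreed outcome, never a case-by-case call."""
    if not gaps:
        return "no_action"
    longest = max(g.duration_s for g in gaps)
    total = sum(g.duration_s for g in gaps)
    if longest <= MAX_SINGLE_GAP_S and total <= MAX_TOTAL_GAP_S:
        # Gaps stay on the evidence timeline; lost time is credited back.
        return "resume_with_time_credit"
    return "policy_retake"
```

Because the same function runs for every participant, two candidates with the same disconnection pattern always receive the same outcome, which is exactly what ad-hoc retakes fail to guarantee.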

What is the fastest way to lose trust in a certification program?

Inconsistent decisions and undocumented enforcement. If outcomes cannot be explained with evidence, disputes become political.
