Integrate TrustExam.ai into Your Existing LMS (Moodle, Canvas, Custom Portals): A Practical Guide

Nurali Sarbakysh

CEO

Jan 12, 2026

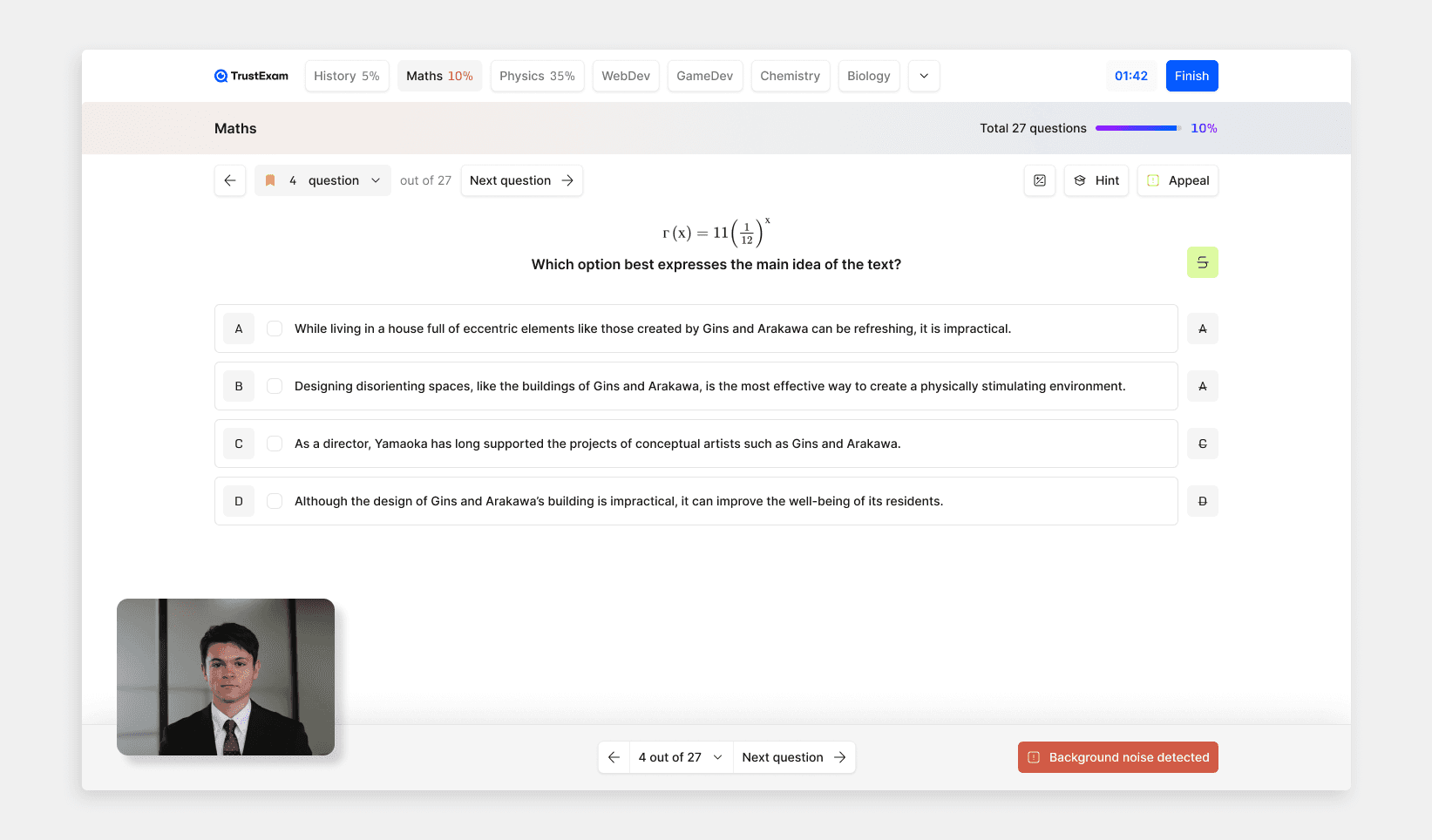

If you already run exams inside an LMS or a custom testing portal, the fastest path to secure proctoring is to keep your platform as the primary UI and embed TrustExam proctoring in the background. That is exactly how TrustExam’s integration flow is designed: a backend API call creates an exam session and returns a token, the frontend SDK mounts proctoring inside your exam page, and webhooks plus AI reports bring results back into your system.

1) What “integration” means in practice

Most teams do not want to migrate content, rebuild exams, or force users into a separate proctoring portal. Integration usually means:

Your LMS stays in control: logins, exam rules, questions, timers, grading, attempts.

TrustExam handles proctoring: camera, screen share, audio monitoring, identity checks, evidence collection, and integrity analytics.

You get results back: via report_url, webhooks, and the AI attempt report API.

This approach works for Moodle, Canvas, in-house LMS, government portals, and any web testing system that can call an API and load a JS script.

2) The two-layer integration model (backend + frontend)

TrustExam’s recommended integration consists of two layers:

Layer A: Backend (server-to-server)

Your backend registers:

the assignment (exam definition and settings)

the student (test-taker identity)

optional session attempt metadata

TrustExam returns:

external_session.token (used by the frontend SDK)

report_url (direct link to the attempt report in the TrustExam UI)

Layer B: Frontend (browser/client)

Your exam page loads the Proctoring SDK, initializes it using the token, then starts and finishes attempts from your UI.

3) Backend API: create an exam session and get the token

Endpoint and authentication

TrustExam’s backend endpoint for creating an external session is:

POST https://api.trustexam.ai/api/external-session/assignment.json?api_token=YOUR_API_TOKEN

You can also pass the token in headers:

X-Authorization: YOUR_API_TOKEN
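As a rough sketch, a backend might assemble the request like this, using the header form of authentication. Only the endpoint URL and the `X-Authorization` header come from the text above; the function name `buildCreateSessionRequest`, the `HttpRequestSpec` shape, and the `Content-Type` header are illustrative assumptions, not part of the TrustExam API.

```typescript
// Endpoint taken from the article; everything else is an illustrative assumption.
const CREATE_SESSION_URL =
  "https://api.trustexam.ai/api/external-session/assignment.json";

interface HttpRequestSpec {
  method: "POST";
  url: string;
  headers: Record<string, string>;
  body: string;
}

// Builds a request description that any HTTP client can send.
// A header keeps the token out of the URL, where it could end up in access logs.
function buildCreateSessionRequest(apiToken: string, payload: object): HttpRequestSpec {
  if (apiToken.trim() === "") {
    throw new Error("API token must not be empty");
  }
  return {
    method: "POST",
    url: CREATE_SESSION_URL,
    headers: {
      "X-Authorization": apiToken,
      "Content-Type": "application/json", // assumed: the article only says the body is JSON
    },
    body: JSON.stringify(payload),
  };
}

// Example call with placeholder values.
const sessionRequest = buildCreateSessionRequest("YOUR_API_TOKEN", {
  application: "browser",
});
```

Returning a plain description instead of sending the request keeps the sketch independent of any particular HTTP client.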

The response you should store

The response includes:

external_session.token

report_url

You typically store these with your attempt record so your admin panel can always open the report, and your frontend can always initialize proctoring.
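One way to pull those two values out of the response before storing them is sketched below. It assumes the token is nested under an `external_session` object, as the dotted name suggests; `StoredProctoringSession`, `extractSession`, and the example values are hypothetical names for your own code.

```typescript
// What you might keep next to your attempt record (field names are hypothetical).
interface StoredProctoringSession {
  attemptId: string; // your own attempt ID
  token: string;     // from external_session.token
  reportUrl: string; // from report_url
}

// Reads the token and report URL from a parsed JSON response and fails loudly
// if either is missing, so a broken session is never stored silently.
function extractSession(attemptId: string, response: unknown): StoredProctoringSession {
  const root = response as
    | { external_session?: { token?: unknown }; report_url?: unknown }
    | null;
  const token = root?.external_session?.token;
  const reportUrl = root?.report_url;
  if (typeof token !== "string" || token === "") {
    throw new Error("response is missing external_session.token");
  }
  if (typeof reportUrl !== "string" || reportUrl === "") {
    throw new Error("response is missing report_url");
  }
  return { attemptId, token, reportUrl };
}

// Example with a made-up response body.
const storedSession = extractSession("attempt-981", {
  external_session: { token: "example-token" },
  report_url: "https://example.invalid/report/1",
});
```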

Required JSON structure (what to send)

At minimum, your payload includes:

assignment (required)

assignment.external_id (unique exam identifier in your system)

assignment.name

assignment.settings (where proctoring settings and webhooks live)

student (required)

student.external_id (unique user identifier in your system)

student.name

student.email (recommended)

application (required)

"browser" (default), "tray", or "desktop"

session_data (optional)

session_data.external_id (your internal attempt ID; helpful for reconciling webhooks and reports)
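The structure above could be captured as a type with a small pre-flight check, as in this sketch. The field names follow the list; treating `application` as a top-level string, typing `settings` as an open map, and the `validatePayload` helper are assumptions made for illustration.

```typescript
type ApplicationMode = "browser" | "tray" | "desktop";

interface ExternalSessionPayload {
  assignment: {
    external_id: string;
    name: string;
    settings: Record<string, unknown>; // proctoring settings and webhooks live here
  };
  student: { external_id: string; name: string; email?: string };
  application: ApplicationMode; // assumed to be a plain string field
  session_data?: { external_id: string };
}

// Returns a list of problems instead of throwing, so they can be logged together.
function validatePayload(p: ExternalSessionPayload): string[] {
  const problems: string[] = [];
  if (p.assignment.external_id === "") problems.push("assignment.external_id is empty");
  if (p.assignment.name === "") problems.push("assignment.name is empty");
  if (p.student.external_id === "") problems.push("student.external_id is empty");
  if (p.student.name === "") problems.push("student.name is empty");
  if (p.student.email === undefined) problems.push("student.email is recommended but missing");
  return problems;
}

// Example payload with placeholder values.
const examplePayload: ExternalSessionPayload = {
  assignment: { external_id: "quiz-42", name: "Midterm", settings: {} },
  student: { external_id: "user-7", name: "A. Student", email: "a.student@example.com" },
  application: "browser",
  session_data: { external_id: "attempt-981" },
};
```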

Why this matters for LMS teams

This structure maps cleanly onto common LMS objects:

Moodle/Canvas “quiz” or “assignment” → assignment.external_id

User record → student.external_id

Attempt record → session_data.external_id

It also lets you update settings without creating duplicates, because TrustExam updates the existing records when you resend the same assignment.external_id and student.external_id.
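To make that update behavior dependable, the IDs need to be derived the same way every time. The sketch below shows one possible scheme; the `LmsAttempt` shape, the `externalIdsFor` helper, and the colon-separated format are all hypothetical choices, not a TrustExam requirement.

```typescript
// Hypothetical shape for an attempt row in your own LMS database.
interface LmsAttempt {
  quizId: number;
  userId: number;
  attemptNumber: number;
}

// Derive IDs from primary keys only, never from titles or emails that can change,
// so resending the payload targets the same records.
function externalIdsFor(lmsName: string, a: LmsAttempt) {
  return {
    assignmentExternalId: `${lmsName}:quiz:${a.quizId}`,
    studentExternalId: `${lmsName}:user:${a.userId}`,
    sessionExternalId: `${lmsName}:quiz:${a.quizId}:user:${a.userId}:attempt:${a.attemptNumber}`,
  };
}

// Example: second attempt of user 7 on quiz 42 in a Moodle instance.
const exampleIds = externalIdsFor("moodle", { quizId: 42, userId: 7, attemptNumber: 2 });
```

Prefixing with the LMS name is one way to avoid collisions if several platforms share a TrustExam account.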

4) Frontend SDK: embed proctoring into your exam page

Load the SDK

Add the TrustExam SDK script to your exam page before any code that calls Proctoring.

Initialize inside your exam container

You mount the proctoring UI into a container (like .wrapper) and pass the token returned from the backend.

Key parameters include:

selector: where to mount the UI

language: "en", "ru", or "kz"

token: external_session.token
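The three parameters can be prepared and checked before they are handed to the SDK, as in this sketch. The parameter names and language codes come from the list above; `buildInitOptions` and the fallback to English are assumptions, and the SDK's actual initialization call is not shown because its name is not given here.

```typescript
type SdkLanguage = "en" | "ru" | "kz";

interface ProctoringInitOptions {
  selector: string;      // where to mount the UI, e.g. ".wrapper"
  language: SdkLanguage;
  token: string;         // external_session.token from your backend
}

// Maps an LMS locale to one of the listed languages and rejects a missing token.
function buildInitOptions(
  token: string,
  lmsLocale: string,
  selector: string = ".wrapper"
): ProctoringInitOptions {
  if (token === "") {
    throw new Error("missing external_session.token");
  }
  const supported: string[] = ["en", "ru", "kz"];
  // Assumed policy: fall back to English for locales the SDK does not list.
  const language = (supported.indexOf(lmsLocale) !== -1 ? lmsLocale : "en") as SdkLanguage;
  return { selector, language, token };
}

// Example with placeholder values.
const exampleInitOptions = buildInitOptions("TOKEN_FROM_BACKEND", "kz");
```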

Start and finish attempts from your own UI

TrustExam provides core methods:

Proctoring.start()

Proctoring.finish()

And helpers:

Proctoring.isFinished()

Proctoring.isCanStartNewAttempt()

This is important for LMS flows where attempts can be limited, restarted, or resumed.
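A sketch of how a "Start" button handler might use the helpers to respect attempt limits follows. Only the four method names come from the text; the boolean return types, the reading of what each helper reports, and the `FakeProctoring` stand-in are assumptions to verify against the SDK documentation.

```typescript
// Only the method names come from the article; return types are assumed.
interface ProctoringLike {
  start(): void;
  finish(): void;
  isFinished(): boolean;
  isCanStartNewAttempt(): boolean;
}

type StartDecision = "started" | "blocked";

// Called from the LMS "Start" button handler.
function tryStartAttempt(p: ProctoringLike): StartDecision {
  // Assumed semantics: a finished attempt may only be followed by another
  // one if the SDK reports that a new attempt is allowed.
  if (p.isFinished() && !p.isCanStartNewAttempt()) {
    return "blocked";
  }
  p.start();
  return "started";
}

// In-memory stand-in so the logic can be exercised without the real SDK.
class FakeProctoring implements ProctoringLike {
  startCalls = 0;
  private finished: boolean;
  private canRestart: boolean;
  constructor(finished: boolean, canRestart: boolean) {
    this.finished = finished;
    this.canRestart = canRestart;
  }
  start(): void { this.startCalls += 1; }
  finish(): void { this.finished = true; }
  isFinished(): boolean { return this.finished; }
  isCanStartNewAttempt(): boolean { return this.canRestart; }
}
```

In the browser, the global `Proctoring` object would be passed in place of the fake.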

5) Content Protect: protect question content in the browser

If your main concern is content leakage (screenshots, screen recording, remote-control capture), TrustExam supports Content Protect in the SDK:

Backend: set content_protect: true in assignment.settings.proctoring_settings

Frontend: pass protector in initialization (define which element to protect)

The docs describe Content Protect as preventing screenshots and screen recording of protected content, and as blocking remote-control tools from capturing the exam area.
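Because Content Protect has a backend half and a frontend half, it is easy to enable one and forget the other. The sketch below checks that the two agree. The `content_protect` flag and the `protector` option name come from the text; the assumption that `protector` takes a CSS selector string, and the `contentProtectProblems` helper, are illustrative guesses.

```typescript
// Backend side: flag inside assignment.settings.proctoring_settings.
const protectedAssignmentSettings = {
  proctoring_settings: { content_protect: true },
};

// Frontend side. ASSUMPTION: `protector` accepts a CSS selector for the
// element to protect; the article names the option but not its shape.
const initOptionsWithProtect = {
  selector: ".wrapper",
  language: "en",
  token: "TOKEN_FROM_BACKEND",
  protector: "#exam-questions",
};

// Reports mismatches between the backend flag and the frontend option.
function contentProtectProblems(
  settings: { proctoring_settings: { content_protect?: boolean } },
  init: { protector?: string }
): string[] {
  const enabled = settings.proctoring_settings.content_protect === true;
  const hasTarget = init.protector !== undefined && init.protector !== "";
  const problems: string[] = [];
  if (enabled && !hasTarget) problems.push("content_protect is on but no protector element is set");
  if (!enabled && hasTarget) problems.push("protector is set but content_protect is off");
  return problems;
}
```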

6) Webhooks + AI reports: get results back into Moodle, Canvas, or your portal

Webhooks (start and finish)

If you configure webhooks in assignment.settings.webhook, TrustExam sends HTTP POST calls when an exam starts and finishes:

webhook.start_url (exam begins)

webhook.finish_url (exam ends)

Webhooks include:

attempt identifiers (including your session_data.external_id if you provided it)

status and timestamps

basic metadata

optional fields from webhook.post, webhook.query, and webhook.headers
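A webhook block for assignment.settings might be built along these lines. The keys `start_url`, `finish_url`, `post`, `query`, and `headers` come from the text; treating the optional fields as string maps, the endpoint paths, and the `X-Webhook-Secret` header are assumptions for illustration.

```typescript
interface WebhookSettings {
  start_url: string;
  finish_url: string;
  // ASSUMPTION: the optional fields are flat key/value maps.
  post?: Record<string, string>;
  query?: Record<string, string>;
  headers?: Record<string, string>;
}

// Builds the webhook block from your LMS base URL and a shared secret.
function buildWebhookSettings(baseUrl: string, sharedSecret: string): WebhookSettings {
  const root = baseUrl.replace(/\/+$/, ""); // drop trailing slashes
  return {
    start_url: `${root}/proctoring/started`,   // hypothetical route in your LMS
    finish_url: `${root}/proctoring/finished`, // hypothetical route in your LMS
    // Hypothetical header your receiver can check to confirm the sender.
    headers: { "X-Webhook-Secret": sharedSecret },
  };
}

// Example: the block as it would sit under assignment.settings.
const assignmentSettingsWithWebhook = {
  webhook: buildWebhookSettings("https://lms.example.edu/", "s3cret"),
};
```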

This is how you:

sync proctoring status into your gradebook

trigger review workflows

notify instructors, coordinators, or HR systems

report_url for admins and reviewers

The backend response includes report_url which opens the attempt report in TrustExam UI. Most teams embed this link into:

instructor dashboard

admin audit panel

appeal review workflow

AI Attempt Report API

If you need structured results for dashboards or automation, TrustExam provides an AI report endpoint:

GET https://trust-rating.trustexam.ai/api/v1/actions/reports/ai/ATTEMPT_ID?api_token=API_KEY
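A small helper for building this URL safely is sketched below. The base URL and the `api_token` query parameter come from the endpoint above; the helper name `aiReportUrl` is hypothetical.

```typescript
const AI_REPORT_BASE =
  "https://trust-rating.trustexam.ai/api/v1/actions/reports/ai";

// Encodes both values so unusual characters cannot break the path or query string.
function aiReportUrl(attemptId: string, apiKey: string): string {
  if (attemptId === "") {
    throw new Error("attempt ID must not be empty");
  }
  return (
    AI_REPORT_BASE +
    "/" + encodeURIComponent(attemptId) +
    "?api_token=" + encodeURIComponent(apiKey)
  );
}

// Example with placeholder values.
const exampleReportUrl = aiReportUrl("12345", "API_KEY");
```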

The docs describe outputs like:

overall risk score

list of detected violations

timeline of suspicious events

recommendations for manual review

This is useful when you want consistent triage at scale, without turning proctoring into “automatic punishment”. Keep human oversight in your policy.
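One way to keep human oversight built in is to let the AI report decide only which review queue an attempt lands in, never the outcome. The sketch below illustrates this; the `AiReportSummary` field names, the 0 to 1 score scale, and the thresholds are hypothetical, since the report's actual schema is not given here.

```typescript
// HYPOTHETICAL shape: the article lists these outputs but not their field names or scale.
interface AiReportSummary {
  riskScore: number;    // assumed to range from 0 (clean) to 1 (high risk)
  violations: string[]; // detected violation labels
}

// Every outcome is a queue for people to work through; none applies a penalty.
type TriageQueue = "no_action" | "manual_review" | "priority_manual_review";

function triage(
  report: AiReportSummary,
  reviewAt: number = 0.4,   // illustrative threshold
  priorityAt: number = 0.8  // illustrative threshold
): TriageQueue {
  if (report.riskScore >= priorityAt) return "priority_manual_review";
  if (report.riskScore >= reviewAt || report.violations.length > 0) return "manual_review";
  return "no_action";
}

// Example with made-up report values.
const exampleQueue = triage({ riskScore: 0.55, violations: ["second_person"] });
```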

7) Proctoring settings you should decide early (and why)

Most failed integrations are not technical. They fail because settings are unclear. Here are the most integration-relevant parameters from the TrustExam settings reference.

Camera and screen capture

You can separately enable streaming vs recording/uploading:

main_camera_record (default true), main_camera_upload (default false)

second_camera_record (default true), second_camera_upload (default false)

screen_share_record (default true), screen_share_upload (default false)

Why it matters:

“Record” supports live monitoring signals.

“Upload” controls evidence retention and storage footprint.
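The defaults above can be written down once and overridden per exam, which also makes it easy to list what evidence will be retained. In this sketch the setting names and default values come from the list; the helpers `withCapture` and `uploadedStreams` are hypothetical.

```typescript
// Defaults as stated in the settings list above.
const captureDefaults = {
  main_camera_record: true,
  main_camera_upload: false,
  second_camera_record: true,
  second_camera_upload: false,
  screen_share_record: true,
  screen_share_upload: false,
};

type CaptureSettings = typeof captureDefaults;

// Applies per-exam overrides on top of the defaults.
function withCapture(overrides: Partial<CaptureSettings>): CaptureSettings {
  return { ...captureDefaults, ...overrides };
}

// Lists the streams that will be uploaded, e.g. for a retention notice.
function uploadedStreams(s: CaptureSettings): string[] {
  const streams: string[] = [];
  if (s.main_camera_upload) streams.push("main_camera");
  if (s.second_camera_upload) streams.push("second_camera");
  if (s.screen_share_upload) streams.push("screen_share");
  return streams;
}

// Example: a high-stakes exam that retains camera and screen evidence.
const highStakesCapture = withCapture({ main_camera_upload: true, screen_share_upload: true });
```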

Identity and verification

photo_head_identity (0 off, 1 on)

video_head_identity (ongoing video-based identity verification)

id_verification (ID document capture)

Why it matters:

This is the core layer for preventing impersonation and supports stronger auditability for appeals.

Audio and second microphone options

noise_detector, speech_detector

second_microphone_record / second_microphone_upload

second_microphone_label (regex for allowed device names)

Why it matters:

Audio policies vary by country and institution. Decide consent language and retention rules before enabling uploads.

Head/face tracking and object detection

Settings include sensitivity, comparison intervals, and whether analysis runs client-side or server-side:

head_tracking_client

head_tracking_server, head_tracking_server_post, head_tracking_server_realtime

object_detect and related thresholds/categories

face_landmarker (eye/gaze tracking)

Why it matters:

Use these as a risk signal, not a single point of truth.

Align thresholds with your exam type: high-stakes licensing vs low-stakes quizzes.

Additional controls

proctoring_mobile_restrict (block exams on mobile)

proctoring_fallback_allow (allow exam start if some features fail)

connection and initialization timeouts

Why it matters:

These settings define your “graceful degradation” policy.

For high-stakes exams, you may want stricter start requirements.
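A degradation policy can be made explicit by choosing these two settings from the exam's stakes, as in this sketch. The setting names come from the list above; representing their values as booleans, the `Stakes` type, and the specific choices per tier are assumptions to check against the settings reference and your own policy.

```typescript
type Stakes = "low" | "high";

// ASSUMPTION: both settings take boolean values.
interface DegradationPolicy {
  proctoring_fallback_allow: boolean;  // allow exam start if some features fail
  proctoring_mobile_restrict: boolean; // block exams on mobile
}

// Illustrative policy: strict start requirements for high-stakes exams,
// lenient ones for low-stakes quizzes.
function degradationPolicy(stakes: Stakes): DegradationPolicy {
  if (stakes === "high") {
    return { proctoring_fallback_allow: false, proctoring_mobile_restrict: true };
  }
  return { proctoring_fallback_allow: true, proctoring_mobile_restrict: false };
}

// Example: the policy for a licensing exam.
const licensingPolicy = degradationPolicy("high");
```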

8) A reference architecture (recommended pattern)

Here is a simple, production-friendly pattern:

Your LMS backend creates a TrustExam external session right before the exam starts.

Your backend stores:

external_session.token

report_url

your own attempt ID in session_data.external_id

Your exam page loads the SDK and initializes using the token.

When the learner clicks “Start”, your UI calls Proctoring.start().

On submit, your UI calls Proctoring.finish().

TrustExam calls your finish_url webhook with attempt metadata.

Your backend pulls:

report_url for admins

AI report via API for analytics and triage (when needed)
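On the LMS side, the steps above can be tracked as a small state machine on the attempt record, so out-of-order events (for example a finish webhook arriving for an attempt that never started) are caught early. The state names and the `advance` helper below are hypothetical and describe your own database, not anything TrustExam stores.

```typescript
// Hypothetical lifecycle states for an attempt row in your LMS.
type AttemptState =
  | "created"        // attempt exists in the LMS
  | "session_issued" // token and report_url stored
  | "in_progress"    // Proctoring.start() called
  | "finished"       // finish_url webhook received
  | "reported";      // AI report pulled for triage

const allowedTransitions: Record<AttemptState, AttemptState[]> = {
  created: ["session_issued"],
  session_issued: ["in_progress"],
  in_progress: ["finished"],
  finished: ["reported"],
  reported: [],
};

// Returns the new state, or throws if the step is out of order.
function advance(current: AttemptState, next: AttemptState): AttemptState {
  if (allowedTransitions[current].indexOf(next) === -1) {
    throw new Error(`illegal transition ${current} -> ${next}`);
  }
  return next;
}

// Example: walking one attempt through the whole flow.
let attemptState: AttemptState = "created";
attemptState = advance(attemptState, "session_issued");
attemptState = advance(attemptState, "in_progress");
attemptState = advance(attemptState, "finished");
```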

9) Implementation checklist (what your team should plan)

Week 1: Technical integration

Backend session creation (assignment + student + application + optional session_data)

Frontend SDK mount and start/finish

Basic error handling and retries (timeouts, user permissions)

Week 2: Policy and governance

Consent and privacy notices

Retention and access controls for video/screen/audio

Appeals workflow: who can view reports, who decides outcomes

Week 3: Scale and operations

Webhooks into your grading workflow

AI report integration for triage

Monitoring: webhook delivery, token lifecycle, failure rates

10) Common integration pitfalls (and how to avoid them)

Token created too early

Create sessions close to exam time. Store tokens securely.

No stable IDs

Use consistent assignment.external_id and student.external_id. TrustExam updates records if you resend the same IDs.

Unclear proctoring policy

Decide what happens when a camera fails, screen share is denied, or audio is blocked. Use proctoring_fallback_allow intentionally.

Results not routed back

Without webhooks, your LMS team ends up checking a separate UI. Configure start_url and finish_url early.

Too strict settings for low-stakes exams

Start with a moderate policy. Tighten later based on observed behavior and appeals volume.

11) Where TrustExam.ai fits

If you want your Moodle, Canvas, or custom LMS to stay as the primary exam experience, TrustExam’s integration model is built for exactly that: backend token issuance, frontend embed, webhook-driven workflows, and audit-friendly reports.

If you share:

your LMS type (Moodle/Canvas/custom),

your exam types (midterms, admissions, olympiads, certification),

and your must-have compliance constraints,

you can map the right proctoring settings profile (identity, screen, audio, evidence retention) and ship an integration that scales without creating a review bottleneck.
