How TrustExam Supports a University Network via LMS Integrations and a Direct Exam Integrity Platform

Orken Rakhmatulla

Head of Education

Jan 8, 2026

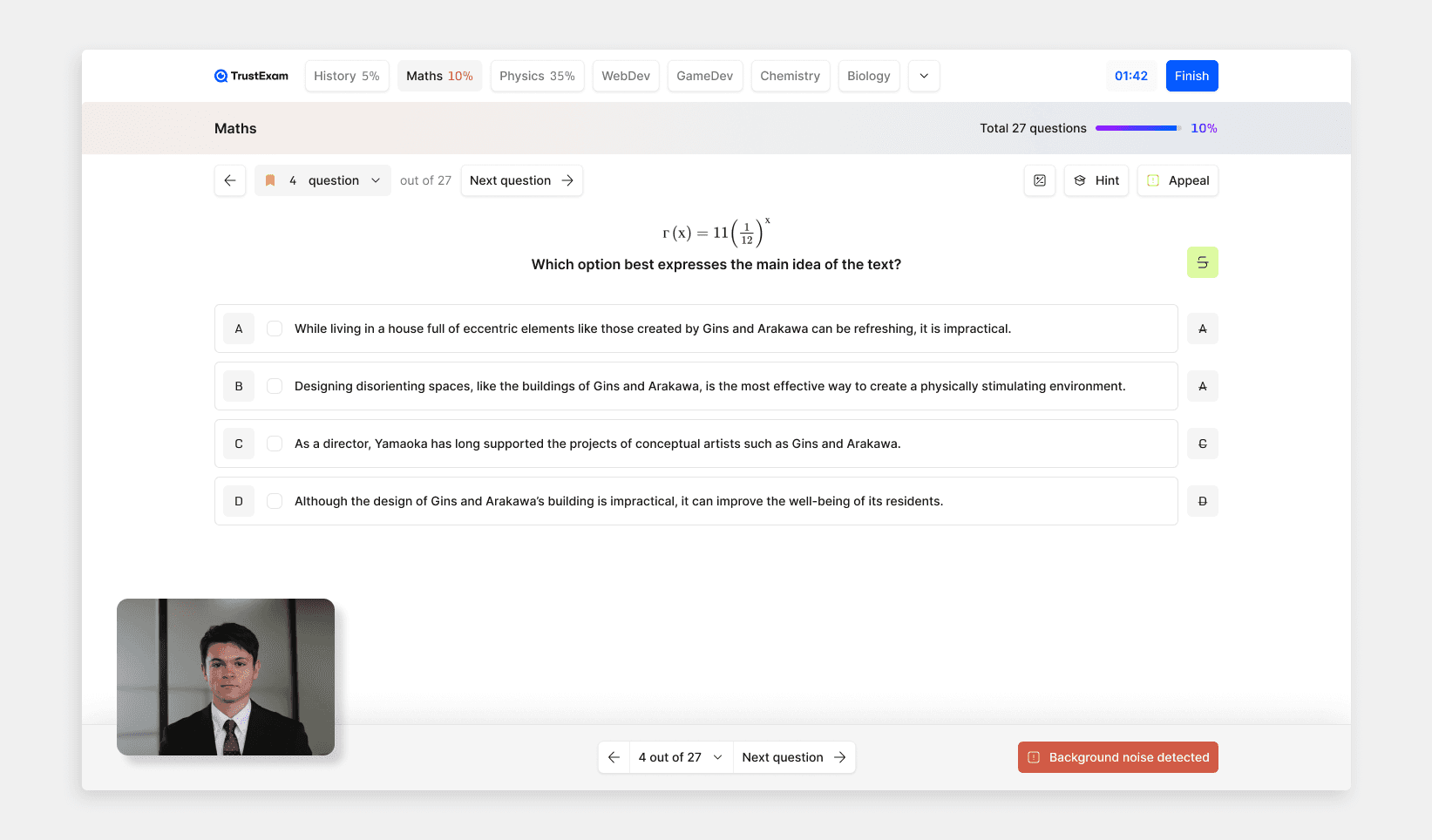

How TrustExam Supports a University Network via LMS Integrations and a Direct Exam Integrity Platform - 1.5M Exams Delivered

Over the last few years, I have learned a simple rule about university assessment at scale. The risk is not only cheating. The risk is inconsistency. When different campuses run exams with different rules, different tools, and weak evidence for appeals, the entire network pays the price in reputation and operational chaos.

This case study explains how TrustExam.ai supported a network of universities using two connected delivery modes: integrations with existing LMS platforms (Moodle, Canvas, proprietary LMS, and other education systems) and a direct testing plus proctoring workflow inside TrustExam for high-stakes scenarios. Across these university use cases, we have delivered approximately 1.5 million exams in production, including regular exam sessions, retakes, and admission campaigns.

[IMAGE: A university assessment coordinator reviewing a dashboard that shows multiple campuses, exam schedules, and sessions queued for review. Caption: "A single operational view across a university network reduces chaos during peak exam periods."]

The situation

The university network had a common set of challenges:

Exams were split across different systems. Some lived in the LMS. Others ran in standalone tools.

Integrity rules differed by campus and faculty. That created fairness concerns.

As remote and blended formats grew, so did the risk of impersonation, off-camera assistance, and second-device coaching.

Appeals took longer because evidence was inconsistent or not defensible.

Workload increased for academic staff and committees during peak session weeks and admission windows.

The goal was practical. Strengthen integrity and auditability without breaking established academic processes.

The approach: two delivery modes, one integrity standard

We implemented a model that keeps teaching workflows intact, while standardizing integrity controls and evidence across the network.

LMS-based delivery with TrustExam as the integrity layer

Where the network already used a Learning Management System, we integrated TrustExam as an integrity and reporting layer on top of the familiar student and instructor experience.

Typical LMS and education systems in this model:

Moodle

Canvas

University-owned and network-owned proprietary LMS platforms

Other education portals and testing systems where integration points and session events are available

A key operational reality is that large networks often run more than one LMS. A main campus may standardize on one platform, while affiliates use another. We designed TrustExam to act as the unified integrity and evidence layer across that diversity, while allowing each campus to keep its preferred front-end for learning and testing.

How it worked in practice:

The exam is created in the LMS as usual.

The network selects an integrity profile for that exam type (session, retake, admission).

The candidate completes the assessment in the LMS, while TrustExam collects integrity signals and produces a review-ready report.

Instructors and committees receive outcomes plus evidence packages for contested cases.

This matters because the LMS remains the source of academic structure, while TrustExam becomes the source of integrity standardization and auditability.
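
To make the profile step concrete, here is a minimal sketch of a network-wide profile table keyed by exam type. The class names, field names, and values are hypothetical illustrations and not TrustExam configuration. The point is only that the campus and the LMS are not inputs to the lookup.

```python
from dataclasses import dataclass
from enum import Enum


class ExamType(Enum):
    SESSION = "session"
    RETAKE = "retake"
    ADMISSION = "admission"


@dataclass(frozen=True)
class IntegrityProfile:
    # Hypothetical fields for illustration; not actual TrustExam settings.
    name: str
    identity_check: bool
    screen_recording: bool
    second_camera: bool


# One shared table for the whole network (placeholder values).
NETWORK_PROFILES = {
    ExamType.SESSION: IntegrityProfile("standard", True, True, False),
    ExamType.RETAKE: IntegrityProfile("elevated", True, True, False),
    ExamType.ADMISSION: IntegrityProfile("high-stakes", True, True, True),
}


def profile_for(exam_type: ExamType) -> IntegrityProfile:
    """Return the shared profile; campus and LMS do not affect the result."""
    return NETWORK_PROFILES[exam_type]
```

Because the lookup depends only on the exam type, two campuses running the same retake in different LMS platforms resolve the same profile.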

If your university network runs exams in Moodle, Canvas, or a proprietary LMS, TrustExam can add proctoring, device integrity checks, and evidence timelines without replacing your existing learning environment. Many networks start with a pilot on one faculty or campus, then scale to the full network once the review workflow is tuned.

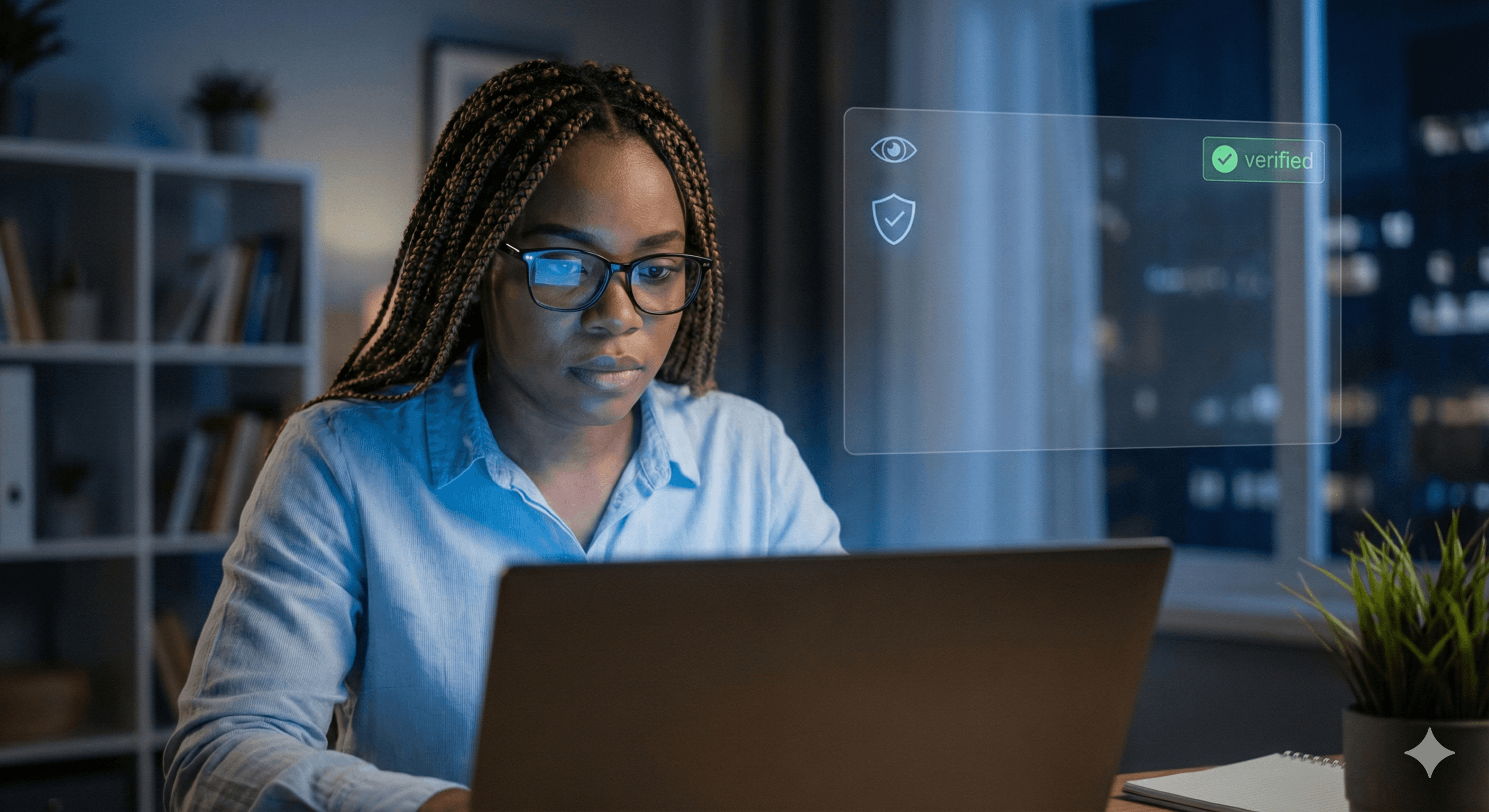

Direct delivery inside TrustExam for high-stakes and external candidate flows

Certain exam scenarios are difficult to secure inside an LMS workflow, especially when candidates are not enrolled students or when the institution needs stronger control and evidence. In these cases, the exam runs end-to-end in TrustExam: test delivery, proctoring, integrity signals, risk scoring, and reporting.

The network used direct delivery for:

Admission exams and selection campaigns

Network-wide standardized exams across multiple universities

Retakes with elevated dispute risk

Exams for external candidates without LMS accounts

The advantage is a single controlled environment with consistent rules, consistent evidence, and clearer operations under peak load.
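
The routing between the two delivery modes can be sketched as a small decision function. This is an illustration of the four cases listed above, with hypothetical field names; a real network would encode its own policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExamRequest:
    # Hypothetical request shape used only for this sketch.
    exam_type: str                      # "session", "retake", or "admission"
    has_lms_account: bool               # False for external candidates
    network_wide: bool = False          # standardized exam across universities
    elevated_dispute_risk: bool = False


def delivery_mode(req: ExamRequest) -> str:
    """Return "direct" for the cases listed above, otherwise "lms"."""
    if req.exam_type == "admission":
        return "direct"
    if not req.has_lms_account:
        return "direct"
    if req.network_wide:
        return "direct"
    if req.exam_type == "retake" and req.elevated_dispute_risk:
        return "direct"
    return "lms"
```

A regular session exam for an enrolled student stays in the LMS, while the same student's retake moves to direct delivery only when the dispute risk is marked as elevated.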

[IMAGE: A candidate taking an admission exam at home with a readiness check screen and a clear rules and consent notice. Caption: "Admission exams require clear rules and defensible evidence, not aggressive friction."]

What we delivered across core university scenarios

Exam sessions (high volume, limited reviewer capacity)

The main challenge during exam sessions is scale. Thousands of candidates sit assessments within narrow windows. If every recording must be watched manually, committees burn out and decisions become inconsistent.

We implemented:

Integrity profiles by exam type, aligned across campuses

Risk-based prioritization for review, so committees focus on the most relevant cases

Standardized reports and evidence timelines across the network

The outcome for academic committees was not a simple "flag". It was a structured, time-coded report that supported consistent decisions and reduced the time spent on appeals.
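
Risk-based prioritization can be illustrated with a short sketch: given a fixed reviewer capacity, pick the highest-scoring sessions first. The 0.0 to 1.0 score scale, the threshold, and all names here are assumptions for illustration, not the scoring model used in production.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class SessionSummary:
    session_id: str
    risk_score: float  # hypothetical scale: 0.0 (no signals) to 1.0 (many signals)


def review_queue(sessions, capacity, min_score=0.0):
    """Return up to `capacity` sessions, highest risk first.

    Sessions below `min_score` are left out of the queue entirely.
    """
    eligible = [s for s in sessions if s.risk_score >= min_score]
    return heapq.nlargest(capacity, eligible, key=lambda s: s.risk_score)


sessions = [
    SessionSummary("a", 0.20),
    SessionSummary("b", 0.90),
    SessionSummary("c", 0.60),
    SessionSummary("d", 0.05),
]
queue = review_queue(sessions, capacity=2, min_score=0.1)
print([s.session_id for s in queue])  # ['b', 'c']
```

The queue only orders the work. The decision on each case still belongs to the committee.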

Admission exams (high stakes, higher pressure)

Admission windows have a different threat profile:

Increased incentive for impersonation and proxy test taking

Higher exposure to organized cheating attempts

Peak-day load and strict fairness expectations

For these flows, the network increased:

Identity verification steps aligned with policy and local rules

Environment and device controls appropriate to the stakes

Evidence depth for admissions committees and dispute resolution

The focus was not punitive automation. The focus was defensible integrity decisions supported by clear evidence.

Why this scaled across a network, not only a single university

In a multi-campus environment, the biggest integrity risk is inconsistency. If one campus is strict and another is permissive, ranking and pass decisions become vulnerable. So we designed the program around standardization:

Integrity profiles shared across the network

A consistent review and appeals workflow

Roles and permissions aligned to governance needs (instructor, committee, audit, campus admin)

The goal was simple. Different campuses can keep different academic structures, but integrity rules and evidence formats must be consistent across the network.
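
A minimal sketch of how the four roles could map to permissions is shown below. The role names come from the list above; the actions and the assignment of actions to roles are assumptions for illustration, and each network would set its own mapping.

```python
from enum import Enum, auto


class Role(Enum):
    INSTRUCTOR = auto()
    COMMITTEE = auto()
    AUDIT = auto()
    CAMPUS_ADMIN = auto()


class Action(Enum):
    # Hypothetical actions chosen for this sketch.
    VIEW_OUTCOME = auto()
    VIEW_EVIDENCE = auto()
    DECIDE_CASE = auto()
    VIEW_AUDIT_LOG = auto()
    MANAGE_USERS = auto()


# Illustrative mapping only; governance policy decides the real one.
PERMISSIONS = {
    Role.INSTRUCTOR: frozenset({Action.VIEW_OUTCOME}),
    Role.COMMITTEE: frozenset(
        {Action.VIEW_OUTCOME, Action.VIEW_EVIDENCE, Action.DECIDE_CASE}
    ),
    Role.AUDIT: frozenset(
        {Action.VIEW_OUTCOME, Action.VIEW_EVIDENCE, Action.VIEW_AUDIT_LOG}
    ),
    Role.CAMPUS_ADMIN: frozenset({Action.VIEW_OUTCOME, Action.MANAGE_USERS}),
}


def is_allowed(role: Role, action: Action) -> bool:
    """Deny by default: an action is allowed only if listed for the role."""
    return action in PERMISSIONS.get(role, frozenset())
```

Keeping the mapping in one table, shared by every campus, is what makes access to recordings and evidence auditable across the network.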

What “integrity” means in practice: evidence, not automatic punishment

We intentionally designed the process with human oversight:

The system captures multi-signal events

It produces a time-coded timeline of relevant moments

It supports committee review and structured decision-making

It enables consistent appeals handling

This approach protects fairness. It also reduces legal and reputational risk for the institution.
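
As an illustration of a time-coded timeline, the sketch below orders events from several signals and formats each one with its offset from the start of the exam. The event fields and signal names are hypothetical. Note that the code only presents events for a reviewer; it does not decide anything.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    offset_s: int   # seconds since the exam started
    signal: str     # hypothetical signal name, e.g. "camera" or "audio"
    note: str


def timecode(offset_s: int) -> str:
    """Format a second offset as HH:MM:SS."""
    hours, rem = divmod(offset_s, 3600)
    minutes, seconds = divmod(rem, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}"


def build_timeline(events):
    """Return one formatted line per event, in chronological order."""
    ordered = sorted(events, key=lambda e: e.offset_s)
    return [f"{timecode(e.offset_s)} [{e.signal}] {e.note}" for e in ordered]


events = [
    Event(3725, "audio", "second voice detected"),
    Event(95, "camera", "face not visible"),
]
for line in build_timeline(events):
    print(line)
# 00:01:35 [camera] face not visible
# 01:02:05 [audio] second voice detected
```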

Scale proof: 1.5 million exams delivered

Across university use cases in production, we have supported approximately 1.5 million exams:

Regular exam sessions

Large retake waves

Admission campaigns and selection exams

Blended delivery through LMS integrations and direct exam delivery

The value of this scale is operational maturity. At this volume, real failure modes appear: peak load, infrastructure variance, edge cases, and disputes. A system must perform in production, not only in a demo.

[IMAGE: A reviewer viewing a report that includes a timeline of events, short media snippets, and a final "review recommended" outcome. Caption: "Standardized evidence packets reduce disputes and speed up appeals."]

Governance: privacy, transparency, retention, and appeals

University networks often include minors, international students, and cross-border programs. That raises governance requirements.

In this deployment, governance was treated as part of integrity:

Clear participant notices and transparent rules

Defined retention schedules and controlled deletion

Role-based access to recordings and evidence

Human-in-the-loop decisions for high-impact outcomes

A structured appeals process with consistent evidence formats
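
A retention schedule with controlled deletion can be sketched as a simple date check. The retention periods below are placeholder values, and the rule that evidence under an open appeal is never deleted is an assumption made for this example. Actual periods depend on institutional policy and local law.

```python
from datetime import date, timedelta

# Placeholder retention periods in days; not recommendations.
RETENTION_DAYS = {"session": 90, "retake": 180, "admission": 365}


def deletion_due(exam_type, exam_date, open_appeal=False, today=None):
    """Return True when the evidence for an exam may be deleted.

    Assumption for this sketch: evidence under an open appeal is kept
    regardless of how old it is.
    """
    if open_appeal:
        return False
    today = today or date.today()
    return today >= exam_date + timedelta(days=RETENTION_DAYS[exam_type])
```

Passing `today` explicitly makes the rule easy to test and to replay during an audit.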

For external framing, many institutions align their approach with widely recognized guidance such as NIST Digital Identity Guidelines and established university academic integrity policies. For biometric and presentation attack concepts, ISO/IEC 30107-3 is often referenced in the broader industry. The point is not to copy a document. The point is to show that integrity controls are designed with transparency and oversight.

What this means for potential TrustExam customers

If your network already operates in Moodle, Canvas, a proprietary LMS, or a mix of systems, TrustExam can serve as the unified integrity and evidence layer without disrupting learning workflows. If you run high-stakes flows such as admissions, scholarship selection, or external certification, TrustExam can also deliver the exam directly with stronger operational control and audit-ready reporting.

If you want to evaluate this model for your institution, start with a short pilot: one campus, one exam type, and a clear set of KPIs for workload, disputes, completion rate, and appeals. The TrustExam.ai online proctoring platform provides an integrity stack built for scale and governance: https://trustexam.ai

FAQ

Do we need to replace our LMS to use TrustExam?

No. Many networks use TrustExam as an integrity layer integrated with Moodle, Canvas, or proprietary LMS environments.

When should we run exams directly in TrustExam instead of inside the LMS?

Direct delivery is often better for admissions, external candidates, network-wide standardized exams, and cases where you need stronger evidence and consistent rules across campuses.

How do you avoid over-penalizing students?

We use risk-based review and evidence timelines. High-impact decisions remain human-reviewed, with a defined appeals process.

What should a university prepare before starting a pilot?

Define exam rules, violation criteria, roles, retention expectations, and the intended review and appeals workflow. The technology follows governance, not the other way around.
